OperatorFabric Getting Started

1. Prerequisites

To use OperatorFabric, you need a Linux OS with the following:

- Docker installed, with 4 GB of space available
- 16 GB of RAM minimum, 32 GB recommended

2. Install and run server

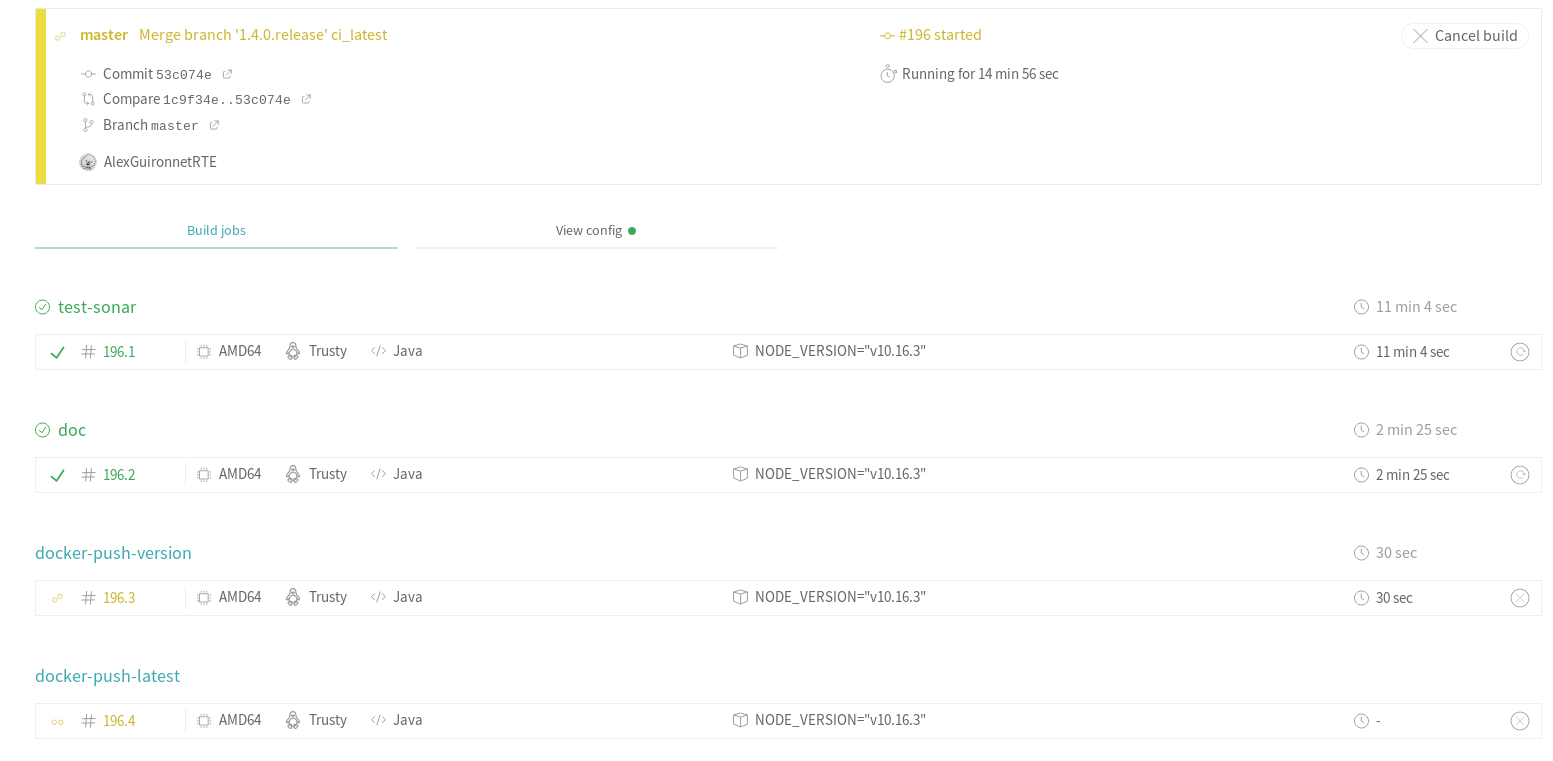

To start OperatorFabric, first clone the getting-started Git repository:

git clone https://github.com/opfab/operatorfabric-getting-started.git

Launch startserver.sh in the server directory, then wait for all the services to start (this usually takes about one minute). Startup is complete when no more logs are written to the output (a few log lines may still appear, but slowly).

Test the connection to the UI: open localhost:2002/ui/ in a browser and log in with operator1 as login and test as password.

After connection, you should see the following screen

To stop the server (if you started it in the background), use:

docker-compose down &

3. Examples

For each example, useful files and scripts are in the directory client/exampleX.

All examples assume you connect to the server from localhost (otherwise, change the provided scripts).

3.1. Example 1: Send and update a basic card

Go to the client/example1 directory and send a card using the provided script:

./sendCard.sh card.json

The result should be an HTTP 201 status and a JSON object such as:

{"count":1,"message":"All pushedCards were successfully handled"}

Check the result in the UI: you should see a card, and if you click on it you will see its details.

3.1.1. Anatomy of the card

A card contains information about the publisher, the recipients, the process, the data to show…

More information can be found in the Card Structure section of the reference documentation.

{

"publisher" : "message-publisher",

"processVersion" : "1",

"process" :"defaultProcess",

"processInstanceId" : "hello-world-1",

"state" : "messageState",

"groupRecipients": ["Dispatcher"],

"severity" : "INFORMATION",

"startDate" : 1553186770681,

"summary" : {"key" : "defaultProcess.summary"},

"title" : {"key" : "defaultProcess.title"},

"data" : {"message" :"Hello World !!! That's my first message"}

}

| If you open the JSON file of the card, you will see '${current_date_in_milliseconds_from_epoch}' in the 'startDate' field. This placeholder ensures that the card is dated to the current day (or the next day in some other examples): the shell script that sends the card creates an environment variable holding the current date, which is then injected into the JSON file. |
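The placeholder substitution described in the note can be sketched in Python. This is only an illustration, not the provided shell script: the function name `render_card` is hypothetical, and the sketch assumes the placeholder appears unquoted in the JSON file so that the result is a number.

```python
import json
import time

PLACEHOLDER = "${current_date_in_milliseconds_from_epoch}"

def render_card(template_text, now_ms=None):
    """Replace the date placeholder with an epoch timestamp in milliseconds."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    # Assumption: the placeholder is unquoted, so the substituted value parses as a number
    return json.loads(template_text.replace(PLACEHOLDER, str(now_ms)))

template = (
    '{"processInstanceId": "hello-world-1", '
    '"startDate": ${current_date_in_milliseconds_from_epoch}}'
)
card = render_card(template, now_ms=1553186770681)
print(card["startDate"])
```

Passing `now_ms` explicitly keeps the sketch reproducible; leaving it out uses the current time, as the script does.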

3.1.2. Update the card

We can send a new version of the card (cardUpdate.json) in which two fields change:

- the message (field data.message in the JSON file)
- the severity (field severity in the JSON file)

{

"publisher" : "message-publisher",

"processVersion" : "1",

"process" :"defaultProcess",

"processInstanceId" : "hello-world-1",

"state" : "messageState",

"groupRecipients": ["Dispatcher"],

"severity" : "ALARM",

"startDate" : 1553186770681,

"summary" : {"key" : "defaultProcess.summary"},

"title" : {"key" : "defaultProcess.title"},

"data" : {"message" :":That's my second message"}

}

You can send the updated card with:

./sendCard.sh cardUpdate.json

The card should be updated on the UI.

3.2. Example 2: Publish a new bundle

The way the card is displayed in the UI is defined via a bundle containing templates and a process description.

The bundle structure is the following:

├── css : stylesheet files

├── i18n : internationalization files

├── template :

│   ├── en : handlebars templates for detail card rendering

│   ├── ....

└── config.json : process description and global configuration

The bundle is provided in the bundle directory of example2. It contains a new version of the bundle used in example1.

We only change the template and the stylesheet. Instead of displaying:

Message : The message

we display:

You received the following message The message

The template file (template/en/template.handlebars) contains:

<h2> You received the following message </h2>

{{card.data.message}}

In the stylesheet css/style.css, we only change the color value to red (#ff0000):

h2{

color:#ff0000;

font-weight: bold;

}

The global configuration is defined in config.json:

{

"id":"defaultProcess",

"version":"2",

"states":{

"messageState" : {

"templateName" : "template",

"styles" : [ "style" ]

}

}

}

To keep the old bundle, we create a new version by setting version to 2.

3.2.1. Package your bundle

Your bundle needs to be packaged in a tar.gz file. A script is available:

./packageBundle.sh

A file named bundle.tar.gz will be created.
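For readers who want to see what such packaging involves, here is a minimal Python sketch. It is not the content of packageBundle.sh: the function name `package_bundle` is hypothetical, and the sketch assumes the bundle entries (config.json, css, i18n, template) must sit at the root of the archive.

```python
import tarfile
import tempfile
from pathlib import Path

def package_bundle(bundle_dir, archive_path):
    """Create a gzip-compressed tar archive and return the names it contains."""
    bundle_dir = Path(bundle_dir)
    with tarfile.open(archive_path, "w:gz") as archive:
        for entry in sorted(bundle_dir.iterdir()):
            # arcname keeps each entry at the archive root (assumed layout)
            archive.add(entry, arcname=entry.name)
    with tarfile.open(archive_path, "r:gz") as archive:
        return sorted(archive.getnames())

# Demo on a throwaway bundle; the file contents are placeholders
with tempfile.TemporaryDirectory() as tmp:
    bundle = Path(tmp) / "bundle"
    (bundle / "css").mkdir(parents=True)
    (bundle / "config.json").write_text('{"id":"defaultProcess","version":"2"}')
    (bundle / "css" / "style.css").write_text("h2{color:#ff0000;}")
    names = package_bundle(bundle, Path(tmp) / "bundle.tar.gz")
print(names)
```

Listing the archive right after writing it is a cheap way to confirm that config.json ended up at the root.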

3.2.2. Get a Token

To send the bundle you need to be authenticated. To get a token you can source the provided script:

source ./getToken.sh

This will run the following command:

curl -s -X POST -d "username=admin&password=test&grant_type=password&client_id=opfab-client" http://localhost:2002/auth/token

This should return a JSON response like this:

{"access_token":"eyJhbGciOiJSUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICJSbXFOVTNLN0x4ck5SRmtIVTJxcTZZcTEya1RDaXNtRkw5U2NwbkNPeDBjIn0.eyJqdGkiOiIzZDhlODY3MS1jMDhjLTQ3NDktOTQyOC1hZTdhOTE5OWRmNjIiLCJleHAiOjE1NzU1ODQ0NTYsIm5iZiI6MCwiaWF0IjoxNTc1NTQ4NDU2LCJpc3MiOiJodHRwOi8va2V5Y2xvYWs6ODA4MC9hdXRoL3JlYWxtcy9kZXYiLCJhdWQiOiJhY2NvdW50Iiwic3ViIjoiYTNhM2IxYTYtMWVlYi00NDI5LWE2OGItNWQ1YWI1YjNhMTI5IiwidHlwIjoiQmVhcmVyIiwiYXpwIjoib3BmYWItY2xpZW50IiwiYXV0aF90aW1lIjowLCJzZXNzaW9uX3N0YXRlIjoiODc3NzZjOTktYjA1MC00NmQxLTg5YjYtNDljYzIxNTQyMDBhIiwiYWNyIjoiMSIsInJlYWxtX2FjY2VzcyI6eyJyb2xlcyI6WyJvZmZsaW5lX2FjY2VzcyIsInVtYV9hdXRob3JpemF0aW9uIl19LCJyZXNvdXJjZV9hY2Nlc3MiOnsiYWNjb3VudCI6eyJyb2xlcyI6WyJtYW5hZ2UtYWNjb3VudCIsIm1hbmFnZS1hY2NvdW50LWxpbmtzIiwidmlldy1wcm9maWxlIl19fSwic2NvcGUiOiJlbWFpbCBwcm9maWxlIiwic3ViIjoiYWRtaW4iLCJlbWFpbF92ZXJpZmllZCI6ZmFsc2UsInByZWZlcnJlZF91c2VybmFtZSI6ImFkbWluIn0.XMLjdOJV-A-iZrtq7sobcvU9XtJVmKKv9Tnv921PjtvJ85CnHP-qXp2hYf5D8TXnn32lILVD3g8F9iXs0otMAbpA9j9Re2QPadwRnGNLIzmD5pLzjJ7c18PWZUVscbaqdP5PfVFA67-j-YmQBwxiys8psF8keJFvmg-ExOGh66lCayClceQaUUdxpeuKFDxOSkFVEJcVxdelFtrEbpoq0KNPtYk7vtoG74zO3KjNGrzLkSE_e4wR6MHVFrZVJwG9cEPd_dLGS-GmkYjB6lorXPyJJ9WYvig56CKDaFry3Vn8AjX_SFSgTB28WkWHYZknTwm9EKeRCsBQlU6MLe4Sng","expires_in":36000,"refresh_expires_in":1800,"refresh_token":"eyJhbGciOiJIUzI1NiIsInR5cCIgOiAiSldUIiwia2lkIiA6ICIzZjdkZTM0OC05N2Q5LTRiOTUtYjViNi04MjExYTI3YjdlNzYifQ.eyJqdGkiOiJhZDY4ODQ4NS1hZGE0LTQwNWEtYjQ4MS1hNmNkMTM2YWY0YWYiLCJleHAiOjE1NzU1NTAyNTYsIm5iZiI6MCwiaWF0IjoxNTc1NTQ4NDU2LCJpc3MiOiJodHRwOi8va2V5Y2xvYWs6ODA4MC9hdXRoL3JlYWxtcy9kZXYiLCJhdWQiOiJodHRwOi8va2V5Y2xvYWs6ODA4MC9hdXRoL3JlYWxtcy9kZXYiLCJzdWIiOiJhM2EzYjFhNi0xZWViLTQ0MjktYTY4Yi01ZDVhYjViM2ExMjkiLCJ0eXAiOiJSZWZyZXNoIiwiYXpwIjoib3BmYWItY2xpZW50IiwiYXV0aF90aW1lIjowLCJzZXNzaW9uX3N0YXRlIjoiODc3NzZjOTktYjA1MC00NmQxLTg5YjYtNDljYzIxNTQyMDBhIiwicmVhbG1fYWNjZXNzIjp7InJvbGVzIjpbIm9mZmxpbmVfYWNjZXNzIiwidW1hX2F1dGhvcml6YXRpb24iXX0sInJlc291cmNlX2FjY2VzcyI6eyJhY2NvdW50Ijp7InJvbGVzIjpbIm1hbmFnZS1hY2NvdW50IiwibWFuYWdlLWFjY2
91bnQtbGlua3MiLCJ2aWV3LXByb2ZpbGUiXX19LCJzY29wZSI6ImVtYWlsIHByb2ZpbGUifQ.sHskPtatqlU9Z8Sfq6yvzUP_L6y-Rv26oPpykyPgzmk","token_type":"bearer","not-before-policy":0,"session_state":"87776c99-b050-46d1-89b6-49cc2154200a","scope":"email profile"}

Your token is the access_token value in the JSON, which the script will export to a $token environment variable.

The sendBundle.sh script below makes use of this variable.

| The token is valid for 10 hours; after that, you will need to request a new one. |
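The token exchange can be sketched in Python as follows. The form fields mirror the curl command above; the helper names `token_request_body` and `read_token` are hypothetical, and the sample response is shortened rather than a real token. The sketch only builds the request body and reads a response; actually posting it to http://localhost:2002/auth/token is left out.

```python
import json
from urllib.parse import urlencode

def token_request_body(username, password, client_id="opfab-client"):
    """Build the form-encoded body sent to the token endpoint."""
    return urlencode({
        "username": username,
        "password": password,
        "grant_type": "password",
        "client_id": client_id,
    })

def read_token(response_text):
    """Return the access token and its validity in hours."""
    payload = json.loads(response_text)
    # expires_in is expressed in seconds
    return payload["access_token"], payload["expires_in"] / 3600

body = token_request_body("admin", "test")
token, hours = read_token(
    '{"access_token": "abc", "expires_in": 36000, "token_type": "bearer"}'
)
print(body)
print(hours)
```

With expires_in set to 36000 seconds, the computed validity matches the 10 hours mentioned in the note.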

3.2.3. Send the bundle

You can now execute the sendBundle.sh script to send the bundle:

./sendBundle.sh

You should receive the following JSON in response, describing your bundle:

{"id":"defaultProcess","name":null,"version":"2","uiVisibility":null,"states":{"messageState":{"responseData":null,"acknowledgementAllowed":true,"color":null,"name":null,"userCard":null,"templateName":"template","styles":["style"],"acknowledgmentAllowed":true,"response":null}}}

3.2.4. Send a card

You can send the following card to test your new bundle:

{

"publisher" : "message-publisher",

"processVersion" : "2",

"process" :"defaultProcess",

"processInstanceId" : "hello-world-1",

"state": "messageState",

"groupRecipients": ["Dispatcher"],

"severity" : "INFORMATION",

"startDate" : 1553186770681,

"summary" : {"key" : "defaultProcess.summary"},

"title" : {"key" : "defaultProcess.title"},

"data" : {"message":"Hello world in new version"}

}

To use the new bundle, we set processVersion to "2".

To send the card:

./sendCard.sh

In the UI, the card detail should now be rendered with the new template.

3.3. Example 3: Process with state

For this example, we will model the following process:

- Step 1: A critical situation arises on the High Voltage grid
- Step 2: The critical situation evolves
- Step 3: The critical situation ends

To model this process in OperatorFabric, we will use a "Process" with "States", defined in the config.json of the bundle:

{

"id":"criticalSituation",

"name": "process.name",

"version":"1",

"states":{

"criticalSituation-begin" : {

"templateName" : "criticalSituationTemplate",

"styles" : [ "style" ],

"acknowledgmentAllowed": "Always"

},

"criticalSituation-update" : {

"templateName" : "criticalSituationTemplate",

"styles" : [ "style" ],

"acknowledgmentAllowed": "Always"

},

"criticalSituation-end" : {

"templateName" : "endCriticalSituationTemplate",

"styles" : [ "style" ],

"acknowledgmentAllowed": "Always"

}

}

}

You can see in the JSON that we define a process named "criticalSituation" with 3 states: criticalSituation-begin, criticalSituation-update and criticalSituation-end. For each state we define a title for the card, and the template and stylesheets to use.

The title is a key that refers to an i18n entry found in the corresponding i18n directory:

{

"process": {

"name": "Critical situation process"

},

"criticalSituation-begin":{

"title":"CRITICAL SITUATION",

"summary":" CRITICAL SITUATION ON THE GRID , SEE DETAIL FOR INSTRUCTION"

},

"criticalSituation-update":{

"title":"CRITICAL SITUATION - UPDATE",

"summary":" CRITICAL SITUATION ON THE GRID , SEE DETAIL FOR INSTRUCTION"

},

"criticalSituation-end":{

"title":"CRITICAL SITUATION - END",

"summary":" CRITICAL SITUATION ENDED"

}

}

The templates can be found in the template directory.

We can now send cards and simulate the process. First, we send a card at the beginning of the critical situation:

{

"publisher" : "alert-publisher",

"processVersion" : "1",

"process" :"criticalSituation",

"processInstanceId" : "alert1",

"state": "criticalSituation-begin",

"groupRecipients": ["Dispatcher"],

"severity" : "ALARM",

"startDate" : 1553186770681,

"summary" : {"key" : "criticalSituation-begin.summary"},

"title" : {"key" : "criticalSituation-begin.title"},

"data" : {"instruction":"Critical situation on the grid : stop immediatly all maintenance on the grid"}

}

The card refers to the process "criticalSituation" defined in config.json. Its state attribute is set to "criticalSituation-begin", which is the first step of the process, also defined in config.json. The card can be sent with the provided script:

./sendCard.sh card.json
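The link between a card and the process definition can be checked before sending. The sketch below is a hypothetical client-side helper (`check_card_against_process` is not part of OperatorFabric) that compares the process, processVersion and state fields of a card with the id, version and states of a config.json, using trimmed-down versions of the objects shown above.

```python
def check_card_against_process(card, process_config):
    """Return a list of problems; an empty list means the card matches the process."""
    problems = []
    if card.get("process") != process_config.get("id"):
        problems.append("process does not match the config id")
    if card.get("processVersion") != process_config.get("version"):
        problems.append("processVersion does not match the config version")
    if card.get("state") not in process_config.get("states", {}):
        problems.append("state is not defined in the config")
    return problems

# Trimmed-down config.json: only the keys needed for the check
process_config = {
    "id": "criticalSituation",
    "version": "1",
    "states": {
        "criticalSituation-begin": {},
        "criticalSituation-update": {},
        "criticalSituation-end": {},
    },
}
card = {
    "process": "criticalSituation",
    "processVersion": "1",
    "state": "criticalSituation-begin",
}
print(check_card_against_process(card, process_config))
```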

Two other cards have been provided to continue the process:

- cardUpdate.json: the state is criticalSituation-update
- cardEnd.json: the state is criticalSituation-end and the severity is set to COMPLIANT

You can send these cards:

./sendCard.sh cardUpdate.json

./sendCard.sh cardEnd.json

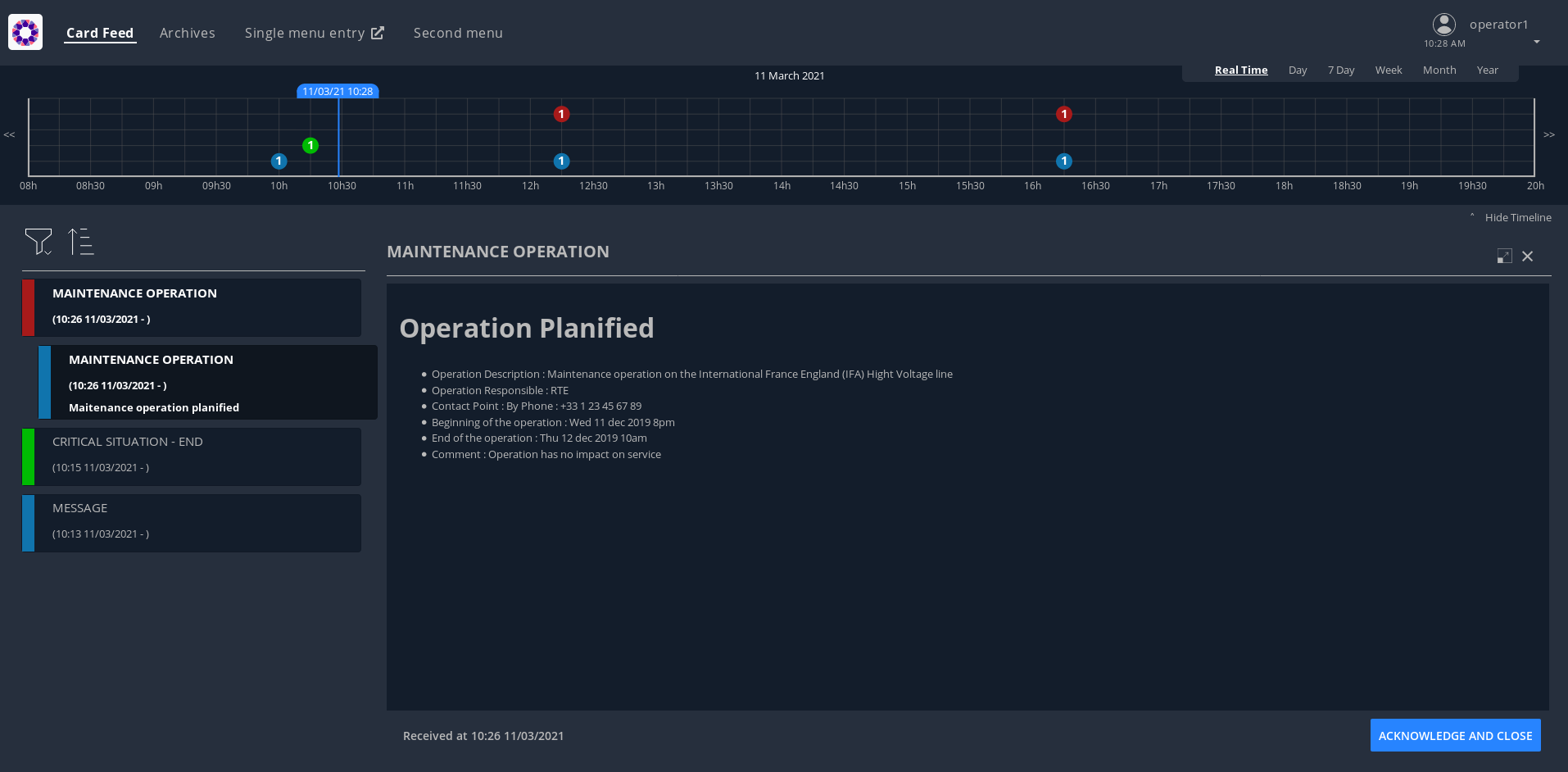

3.4. Example 4: Time Line

To view the card in the timeline, you need to set times in the card using the timeSpans attribute, as in the following card:

{

"publisher" : "scheduledMaintenance-publisher",

"processVersion" : "1",

"process" :"maintenanceProcess",

"processInstanceId" : "maintenance-1",

"state": "planned",

"groupRecipients": ["Dispatcher"],

"severity" : "INFORMATION",

"startDate" : 1553186770681,

"summary" : {"key" : "maintenanceProcess.summary"},

"title" : {"key" : "maintenanceProcess.title"},

"data" : {

"operationDescription":"Maintenance operation on the International France England (IFA) Hight Voltage line ",

"operationResponsible":"RTE",

"contactPoint":"By Phone : +33 1 23 45 67 89 ",

"comment":"Operation has no impact on service"

},

"timeSpans" : [

{"start" : 1576080876779},

{"start" : 1576104912066}

]

}

For this example, we use a new publisher called "scheduledMaintenance-publisher". You don't need to post the corresponding bundle to the businessconfig service: it has been loaded in advance so that it is available out of the box (only for the getting started). If you want to take a look at its content, you can find it under server/businessconfig-storage/scheduledMaintenance-publisher/1.

Before sending the provided card, you need to set the correct time values as epoch timestamps (in ms) in the JSON. Each value you set produces a point in the timeline. In our example, the first point represents the beginning of the maintenance operation and the second point its end.

To get the dates in Epoch, you can use the following commands:

For the first date:

date -d "+ 60 minutes" +%s%N | cut -b1-13

And for the second:

date -d "+ 120 minutes" +%s%N | cut -b1-13
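On systems where `date -d` is not available, the same values can be computed in Python. This is a sketch with a hypothetical helper name (`epoch_ms_in`); it returns the epoch time in milliseconds a given number of minutes from a reference time, which defaults to now.

```python
from datetime import datetime, timedelta, timezone

def epoch_ms_in(minutes, now=None):
    """Epoch time in milliseconds, `minutes` after `now` (default: current time)."""
    now = now or datetime.now(timezone.utc)
    return int((now + timedelta(minutes=minutes)).timestamp() * 1000)

# A fixed reference time keeps the demo reproducible
reference = datetime(2019, 1, 1, tzinfo=timezone.utc)
time_spans = [
    {"start": epoch_ms_in(60, reference)},
    {"start": epoch_ms_in(120, reference)},
]
print(time_spans)
```

Calling `epoch_ms_in(60)` and `epoch_ms_in(120)` without a reference gives the equivalents of the two commands above.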

To send the card, use the provided script in the example4 directory:

./sendCard.sh card.json

A second card (card2.json) is provided as an example. Again, set the time values in the JSON file, then send it:

./sendCard.sh card2.json

This time the severity of the card is ALARM, so you should see the point in red in the timeline.

3.5. Example 5: Card routing mechanism

3.5.1. Card sent to a group

As we saw previously, if a card is sent to a group, then you only need to be a member of the group to receive it.

3.5.2. Card sent to an entity

If a card is sent to an entity, then you must be a member of this entity and have the process/state of the card within your perimeter. As perimeters are attached to groups, you must therefore be a member of a group attached to this perimeter.

Let's send this card:

{

"publisher" : "message-publisher",

"processVersion" : "1",

"entityRecipients" : ["ENTITY1"],

"process" :"defaultProcess",

"processInstanceId" : "cardExample5",

"state" : "messageState",

"severity" : "INFORMATION",

"startDate" : 1553186770681,

"summary" : {"key" : "defaultProcess.summary"},

"title" : {"key" : "defaultProcess.title"},

"data" : {"message" : "Hello World !!! Here is a message for ENTITY1"}

}

Use the provided script:

./sendCard.sh cardSentToEntity.json

The result should be an HTTP 201 status and a JSON object such as:

{"count":1,"message":"All pushedCards were successfully handled"}

Check the result in the UI: you should not see the card.

Now let's create this perimeter:

{

"id" : "getting-startedPerimeter",

"process" : "defaultProcess",

"stateRights" : [

{

"state" : "messageState",

"right" : "Receive"

}

]

}

You can use this command line:

curl -X POST http://localhost:2103/perimeters -H "Content-type:application/json" -H "Authorization:Bearer $token" --data @perimeter.json

or use the provided script:

./createPerimeter.sh perimeter.json

The result should be an HTTP 201 status and a JSON object such as:

{"id":"getting-startedPerimeter","process":"defaultProcess","stateRights":[{"state":"messageState1","right":"Receive"},{"state":"messageState2","right":"ReceiveAndWrite"}]}

Now let's attach this perimeter to the Dispatcher group. You can use this command line:

curl -X PUT http://localhost:2103/perimeters/getting-startedPerimeter/groups -H "Content-type:application/json" -H "Authorization:Bearer $token" --data "[\"Dispatcher\"]"

or use the provided script:

./putPerimeterForGroup.sh

The result should be an HTTP 200 status.
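The two curl calls of this section (create the perimeter, then attach it to a group) can be prepared in Python with the standard library. The sketch below only builds the requests; the helper name `perimeter_requests` is hypothetical, and sending them (for example with `urllib.request.urlopen`) requires a running server and a valid token.

```python
import json
from urllib.request import Request

USERS_URL = "http://localhost:2103"

def perimeter_requests(perimeter, groups, token):
    """Build the POST (create perimeter) and PUT (attach to groups) requests."""
    headers = {
        "Content-type": "application/json",
        "Authorization": f"Bearer {token}",
    }
    create = Request(
        f"{USERS_URL}/perimeters",
        data=json.dumps(perimeter).encode(),
        headers=headers,
        method="POST",
    )
    attach = Request(
        f"{USERS_URL}/perimeters/{perimeter['id']}/groups",
        data=json.dumps(groups).encode(),
        headers=headers,
        method="PUT",
    )
    return create, attach

perimeter = {
    "id": "getting-startedPerimeter",
    "process": "defaultProcess",
    "stateRights": [{"state": "messageState", "right": "Receive"}],
}
# "my-token" stands in for the value of the $token variable
create, attach = perimeter_requests(perimeter, ["Dispatcher"], token="my-token")
print(create.get_method(), create.full_url)
print(attach.get_method(), attach.full_url)
```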

Now, if you refresh the UI or send the card again, you should see the card.

3.5.3. Card sent to a group and an entity

If a card is sent to a group and an entity, then there are two ways to receive this card:

- First possibility: the user is a member of both this entity and this group.

Let's send this card (for ENTITY1 and the Dispatcher group):

{

"publisher" : "message-publisher",

"processVersion" : "1",

"entityRecipients" : ["ENTITY1"],

"process" :"defaultProcess",

"processInstanceId" : "cardExample5_1",

"state": "messageState2",

"groupRecipients": ["Dispatcher"],

"severity" : "INFORMATION",

"startDate" : 1553186770681,

"summary" : {"key" : "defaultProcess.summary"},

"title" : {"key" : "defaultProcess.title"},

"data" : {"message" : "Hello World !!! Here is a message for ENTITY1 and group Dispatcher - process/state not in operator1 perimeter "}

}

Use the provided script:

./sendCard.sh cardSentToEntityAndGroup_1.json

The result should be an HTTP 201 status and a JSON object such as:

{"count":1,"message":"All pushedCards were successfully handled"}

Check the result in the UI: you should see the card.

- Second possibility: the user is a member of this entity and has the process/state of the card within their perimeter. As perimeters are attached to groups, the user must therefore be a member of a group attached to this perimeter.

Let's send this card (for ENTITY1 and the Planner group):

{

"publisher" : "message-publisher",

"processVersion" : "1",

"entityRecipients" : ["ENTITY1"],

"process" :"defaultProcess",

"processInstanceId" : "cardExample5_2",

"state": "messageState",

"groupRecipients": ["Planner"],

"severity" : "INFORMATION",

"startDate" : 1553186770681,

"summary" : {"key" : "defaultProcess.summary"},

"title" : {"key" : "defaultProcess.title"},

"data" : {"message" : "Hello World !!! Here is a message for ENTITY1 and group Planner - process/state in operator1 perimeter "}

}

Use the provided script:

./sendCard.sh cardSentToEntityAndGroup_2.json

The result should be an HTTP 201 status and a JSON object such as:

{"count":1,"message":"All pushedCards were successfully handled"}

Check the result in the UI: you should see the card.

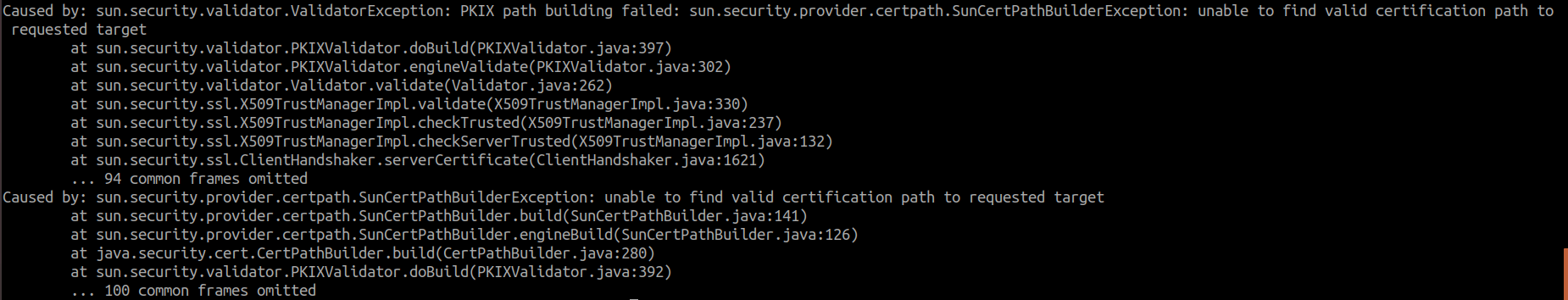

4. Troubleshooting

4.1. My bundle is not loaded

The server sends {"status":"BAD_REQUEST","message":"unable to open submitted file","errors":["Error detected parsing the header"]}, despite correct HTTP headers.

The uploaded bundle is corrupted. Test your bundle in a terminal (Linux solution).

Example for a bundle archive named MyBundleToTest.tar.gz that triggers this error when uploaded:

tar -tzf MyBundleToTest.tar.gz >/dev/null

tar: This does not look like a tar archive

tar: Skipping to next header

tar: Exiting with failure status due to previous errors

4.2. I can’t upload my bundle

The server responds with a message like the following:

{"status":"BAD_REQUEST","message":"unable to open submitted file","errors":["Input is not in the .gz format"]}

The bundle has been compressed using an unsupported format.

4.2.1. Format verification

4.2.1.1. Linux solution

Command line example to verify the format of a bundle archive named MyBundleToTest.tar.gz (which triggers this error when uploaded):

tar -tzf MyBundleToTest.tar.gz >/dev/null

which should, in this case, return the following messages:

gzip: stdin: not in gzip format

tar: Child returned status 1

tar: Error is not recoverable: exiting now
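The two checks above (is the file gzip-compressed, and does it contain a readable tar archive) can also be scripted. The following Python sketch is an assumption-laden local helper, not an OperatorFabric tool: `diagnose_bundle` is a hypothetical name, and it only tells the two failure modes apart before upload.

```python
import gzip
import tarfile
import tempfile
from pathlib import Path

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of any gzip stream

def diagnose_bundle(path):
    """Return 'ok', 'not gzip' or 'not tar' for a candidate bundle archive."""
    with open(path, "rb") as handle:
        if handle.read(2) != GZIP_MAGIC:
            return "not gzip"
    try:
        with tarfile.open(path, "r:gz") as archive:
            archive.getnames()
    except (tarfile.TarError, OSError, EOFError):
        return "not tar"
    return "ok"

# Demo with three throwaway files: a valid bundle, gzipped text, plain text
with tempfile.TemporaryDirectory() as tmp:
    tmp = Path(tmp)
    (tmp / "config.json").write_text("{}")
    good = tmp / "good.tar.gz"
    with tarfile.open(good, "w:gz") as archive:
        archive.add(tmp / "config.json", arcname="config.json")
    zipped_text = tmp / "text.tar.gz"
    with gzip.open(zipped_text, "wb") as handle:
        handle.write(b"this is not a tar archive")
    plain_text = tmp / "plain.tar.gz"
    plain_text.write_bytes(b"this is not even gzip")
    results = {p.name: diagnose_bundle(p) for p in (good, zipped_text, plain_text)}
print(results)
```

A "not gzip" result corresponds to the situation of section 4.2, and "not tar" to the corrupted archive of section 4.1.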

4.3. My bundle is rejected due to internal structure

The server sends {"status":"BAD_REQUEST","message":"Incorrect inner file structure","errors":["$OPERATOR_FABRIC_INSTANCE_PATH/d91ba68c-de6b-4635-a8e8-b58fff77dfd2/config.json (Aucun fichier ou dossier de ce type)"]}

where $OPERATOR_FABRIC_INSTANCE_PATH is the folder where businessconfig files are stored on the server side. The French system message "Aucun fichier ou dossier de ce type" means "No such file or directory".

4.4. No template display

The server sends a 404 for the requested template, with a response like:

{"status":"NOT_FOUND","message":"The specified resource does not exist","errors":["$OPERATOR_FABRIC_INSTANCE_PATH/businessconfig-storage/BUNDLE_TEST/1/template/fr/template1.handlebars (Aucun fichier ou dossier de ce type)"]}

4.4.1. Verification

The previous server response is returned for a request like:

http://localhost:2002/businessconfig/BUNDLE_TEST/templates/template1?locale=fr&version=1

The bundle lacks the localized folder and therefore doesn't contain the requested localization.

If you have access to the businessconfig micro service source code, you should list the content of $THIRDS_PROJECT/build/docker-volume/businessconfig-storage

OperatorFabric Architecture

5. Introduction

The aim of this document is to describe the architecture of the solution, first by defining the business concepts it deals with and then showing how this translates into the technical architecture.

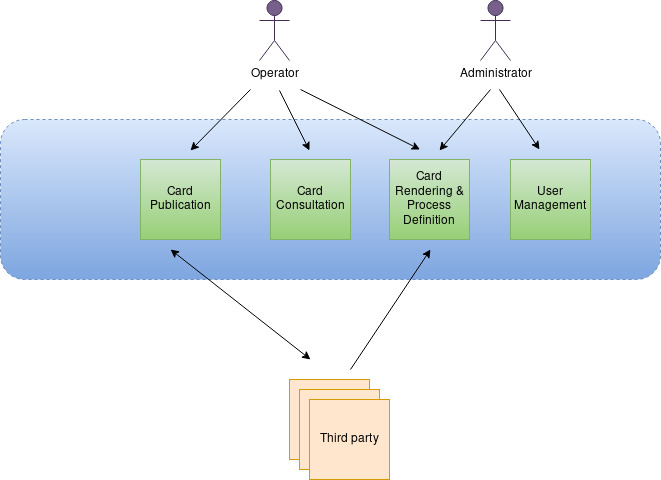

6. Business Architecture

OperatorFabric is based on the concept of cards, which contain data regarding events that are relevant for the operator. A third party tool publishes cards and the cards are received on the screen of the operators. Depending on the type of the cards, the operator can send back information to the third party via a "response card".

6.1. Business components

To do the job, the following business components are defined:

- Card Publication: this component receives the cards from third-party tools or users
- Card Consultation: this component delivers the cards to the operators and provides access to all cards exchanged (archives)
- Card rendering and process definition: this component stores the information for the card rendering (templates, internationalization, …) and a light description of the associated process (states, response card, …). This configuration data can be provided either by an administrator or by a third-party tool.
- User Management: this component is used to manage users, groups, entities and perimeters.

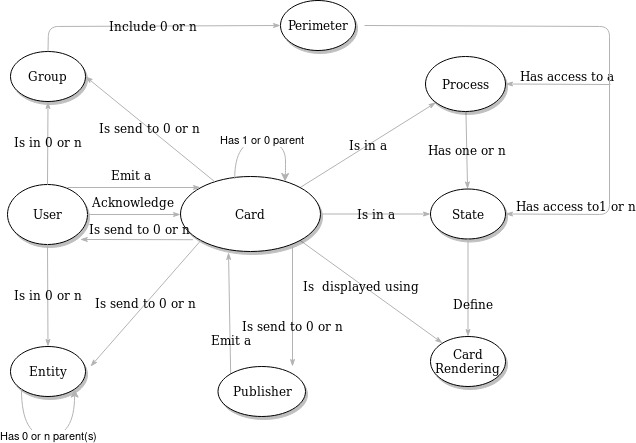

6.2. Business objects

The business objects can be represented as follows:

- Card: the core business object, which contains the data to show to the user (or operator)
- Publisher: the emitter of the card (be it a third-party tool or an entity)
- User: the operator receiving cards and responding via response cards
- Entity: an entity (containing a list of users) can be used to model organizations (examples: control center, company, department…). An entity can be part of another entity or even of several entities.
- Group: a group (containing a list of users) can be used to model roles in organizations (examples: supervisor, dispatcher…)
- Process: the process the card is about
- State: the step in the process
- Perimeter: for a defined group, the visibility of a card for a specific process and state
- Card Rendering: data for card rendering

A card can have a parent card; in this case, the card is called a child card.

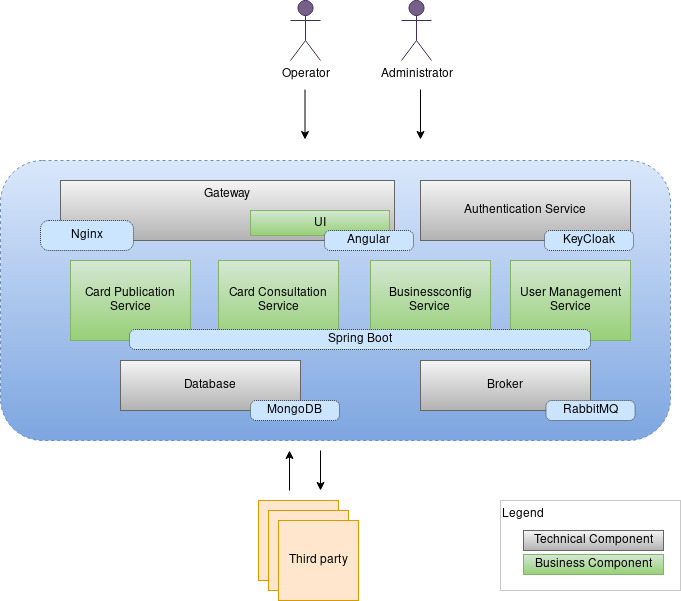

7. Technical Architecture

The architecture is based on independent modules. All business services are accessible via REST API.

7.1. Business components

The business components seen before are found here:

- A "UI" component stores the static pages and the UI code that is downloaded by the browser. The UI is based on Angular, with Handlebars for the card templating.
- The business component named "Card rendering and process definition" is known at the technical level as the "Businessconfig service". This service receives card rendering and process definition as a bundle. The bundle is a tar.gz file containing:
    - a JSON process configuration file (containing states & actions)
    - templates for rendering
    - stylesheets
    - internationalization information
- Except for the UI, which is based on Angular, all business components are based on Spring Boot and packaged via Docker.

Spring WebFlux is used to deliver the cards in a reactive (non-blocking) way.

7.2. Technical components

7.2.1. Gateway

It provides a filtered view of the APIs and statically served pages for external access through browsers or other HTTP-compliant clients. It provides the routing for accessing the services from outside. It is an nginx server packaged with Docker; this component contains the Angular UI component.

7.2.2. Broker

The broker is used to share information asynchronously across all the services. It is implemented via RabbitMQ.

7.2.3. Authentication

The architecture provides a default authentication service via Keycloak, but it can delegate authentication to an external provider. Authentication is done through OAuth2; three flows are supported: implicit, authorization code and password.

7.2.4. Database

The cards are stored in a MongoDB database. The bundles are stored in a file system.

OperatorFabric Reference Documentation

The aim of this document is to:

- Explain what OperatorFabric is about and define the concepts it relies on
- Give a basic tour of its features from a user perspective

8. Introduction

To perform their duties, an operator has to interact with multiple applications (perform actions, watch for alerts, etc.), which can prove difficult if there are too many of them.

The idea is to aggregate all the notifications from all these applications into a single screen, and to allow the operator to act on them if needed.

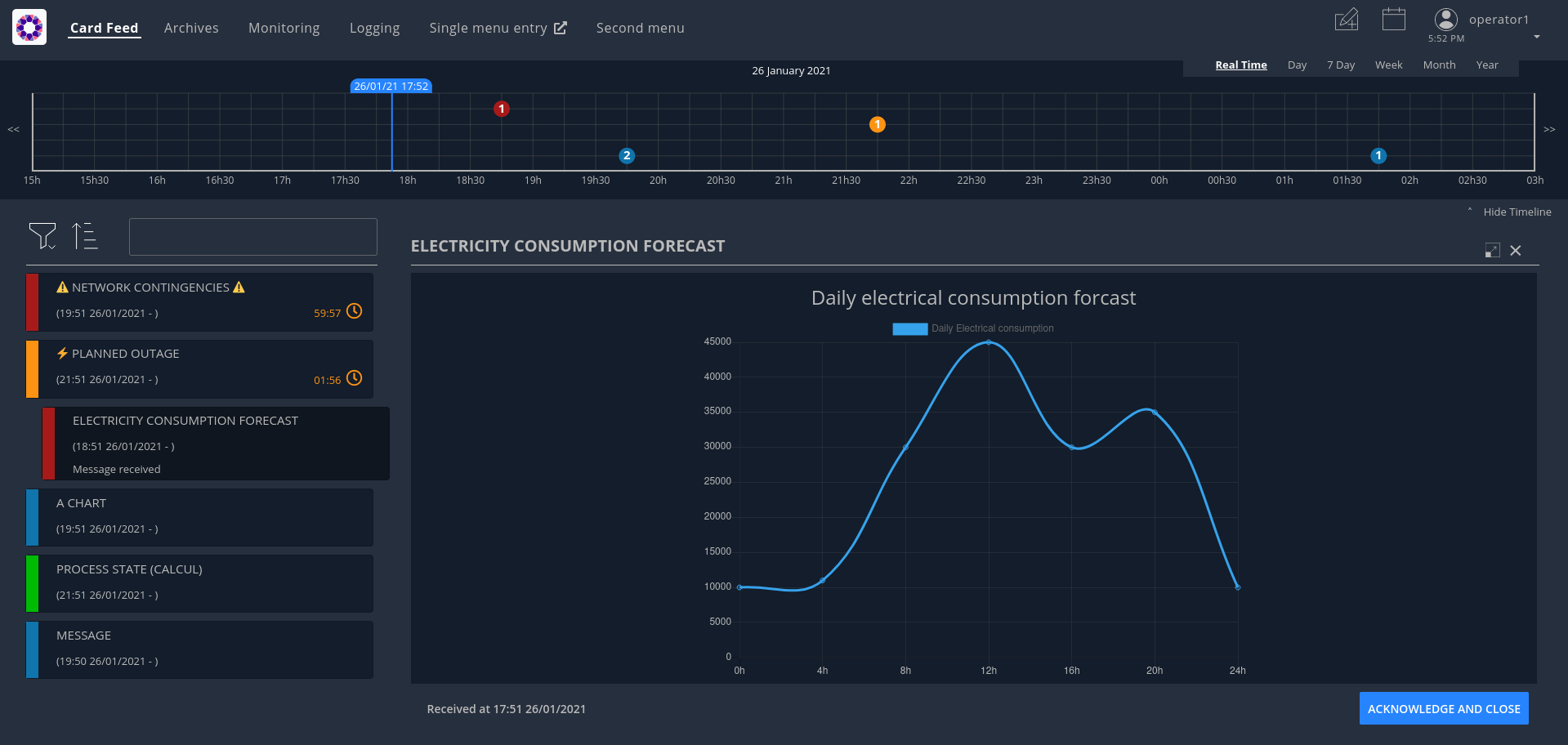

These notifications are materialized by cards sorted in a feed according to their period of relevance and their severity. When a card is selected in the feed, the right-hand pane displays the details of the card.

In addition, the cards are also shown as events on a timeline at the top of the screen.

Part of the value of OperatorFabric is that it makes integration very simple for third-party applications. To start publishing cards to users in an OperatorFabric instance, all they have to do is:

- Register as a publisher through the "Businessconfig" service and provide a "bundle" containing handlebars templates defining how cards should be rendered, i18n info, etc.
- Publish cards as JSON containing card data through the card publication API

OperatorFabric will then:

- Dispatch the cards to the appropriate users (by computing the actual users who should receive the card from the recipient rules defined in the card)
- Take care of the rendering of the cards
- Display relevant information from the cards in the timeline

A card is not only information; it can also carry one or more questions the operator has to answer. When the operator responds, a card is emitted to the sender of the initial card, and the response can be seen by other operators.

It is also possible for users to send cards directly to other users using predefined card templates.

9. Sending cards

The Cards Publication Service exposes a REST API through which third-party applications, or "publishers", can post cards to OperatorFabric. It then handles those cards:

- Time-stamping them with a "publishDate"
- Sending them to the message broker (RabbitMQ) to be delivered in real time to the appropriate operators
- Persisting them to the database (MongoDB) for later consultation

9.1. Card Structure

Cards are represented as JSON objects. The technical design of cards is described in the cards API documentation. A card corresponds to the state of a Process in OperatorFabric.

9.1.1. Technical Information of the card

Those attributes are used by OperatorFabric to manage how cards are stored, to whom and when they’re sent.

9.1.1.1. Mandatory information

Below, the json technical key is in the '()' following the title.

Publisher (publisher)

The publisher field bears the identifier of the emitter of the card, be it an entity or an external service.

Process (process)

This field indicates which process the card is attached to. This information is used to resolve the presentation resources (bundle) used to render the card and card details.

Process Version (processVersion)

The rendering of cards of a given process can evolve over time. To allow for this while making sure previous cards remain correctly handled, OperatorFabric can manage several versions of the same process. The processVersion field indicates which version of the process should be used to retrieve the presentation resources (i18n, templates, etc.) to render this card.

9.1.1.2. State in the process (state)

The card represents a specific state in the process. In addition to the process, this information is used to resolve the presentation resources used to render the card and card details.

Severity (severity)

The severity is a core principle of the OperatorFabric Card system. There are 4 severities available, and each is associated with a color in the GUI. Here are the severities and their meaning for OperatorFabric:

- ALARM: represents a critical state of the associated process and needs an action from the operator. In the UI, the card is red.
- ACTION: the associated process needs an action from operators in order to evolve correctly. In the UI, the card is orange.
- COMPLIANT: the process related to the card is in a compliant status. In the UI, the card is green.
- INFORMATION: gives information to the operator. In the UI, the card is blue.

9.1.1.3. Optional information

EntityRecipients (entityRecipients)

Used to send cards to entities: all users who are members of the listed entities and have the right for the process/state of the card will receive it.

GroupRecipients (groupRecipients)

Used to send cards to groups: all users who are members of the groups will receive it. If this field is used in conjunction with entityRecipients, then to receive the cards:

- users must be members of one of the entities AND one of the groups

OR

- users must be members of one of the entities AND have the right for the process/state of the card.

UserRecipients (userRecipients)

Used to send cards directly to users without using groups or entities for card routing.
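The recipient rules described for entityRecipients, groupRecipients and userRecipients can be summarized as a small decision function. This is a sketch of the rules as stated in this section, not the actual implementation of the dispatching: the function name `receives_card` and the flattened user model (sets of groups, entities, and process/state pairs the user has rights on through their groups) are assumptions made for illustration.

```python
def receives_card(card, user):
    """Apply the recipient rules of this section to one card and one user."""
    if user["login"] in card.get("userRecipients", []):
        return True
    entities = card.get("entityRecipients", [])
    groups = card.get("groupRecipients", [])
    in_entity = any(entity in user["entities"] for entity in entities)
    in_group = any(group in user["groups"] for group in groups)
    has_right = (card["process"], card["state"]) in user["perimeter"]
    if entities:
        # Entity membership is required, plus group membership or the process/state right
        return in_entity and (in_group or has_right)
    return in_group

# Assumed user model for illustration
operator1 = {
    "login": "operator1",
    "groups": {"Dispatcher"},
    "entities": {"ENTITY1"},
    "perimeter": {("defaultProcess", "messageState")},
}
card_entity_and_group = {
    "process": "defaultProcess", "state": "messageState2",
    "entityRecipients": ["ENTITY1"], "groupRecipients": ["Dispatcher"],
}
card_entity_and_other_group = {
    "process": "defaultProcess", "state": "messageState",
    "entityRecipients": ["ENTITY1"], "groupRecipients": ["Planner"],
}
print(receives_card(card_entity_and_group, operator1))
print(receives_card(card_entity_and_other_group, operator1))
```

The first card is received through the entity and group memberships, the second through the entity membership and the perimeter.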

Last Time to Decide (lttd)

Fixes the moment until which a response is possible for the card. After this moment, the response button won't be usable. When the lttd time is approaching, a clock showing the remaining time is visible on the card in the feed. The lttd time can also be set for cards that don't expect any response.

SecondsBeforeTimeSpanForReminder (secondsBeforeTimeSpanForReminder)

Fixes how long before the event defined by the card the reminder is triggered (see Card reminder).

9.1.1.4. Business period

We define the business period as running from startDate to endDate. The card will be visible in the UI if the business period overlaps the period chosen by the user (i.e. the period selected on the timeline). If endDate is not set, the card will be visible as soon as the startDate falls between the start and end dates of the chosen period.
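The visibility rule can be written as a short predicate. This is a sketch of the rule as described in this section, with a hypothetical function name (`is_visible`) and all dates expressed as epoch milliseconds; boundary values are treated as inclusive, which is an assumption.

```python
def is_visible(card, period_start, period_end):
    """True if the card's business period matches the user-chosen period."""
    start = card["startDate"]
    end = card.get("endDate")
    if end is None:
        # No endDate: the startDate must fall inside the chosen period
        return period_start <= start <= period_end
    # Otherwise the two intervals must overlap
    return start <= period_end and end >= period_start

chosen_period = (1546297200000, 1546383600000)
card_without_end = {"startDate": 1546300800000}
card_spanning = {"startDate": 1546200000000, "endDate": 1546300000000}
card_before = {"startDate": 1546100000000, "endDate": 1546200000000}
for card in (card_without_end, card_spanning, card_before):
    print(is_visible(card, *chosen_period))
```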

9.1.1.5. Store information

uid (uid)

Unique identifier of the card in the OperatorFabric system. This attribute can be sent with card, but by default it’s managed by OperatorFabric.

9.1.2. User destined Information of the card

There are two kinds of user-destined information in a card. Some are restricted to the card format; others are defined by the publisher, as long as they are encoded in JSON format.

9.1.2.2. Custom part

Data (data)

Determines where custom information is stored. The content of this attribute is purely the publisher's choice. As long as it's in JSON format, this content can be used to display details. For the way the details are displayed, see below.

| You must not use dots in JSON field names. Otherwise, the card will be refused with the following message: "Error, unable to handle pushed Cards: Map key xxx.xxx contains dots but no replacement was configured!" |
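A publisher can check its data for dotted field names before sending a card. The sketch below is a hypothetical client-side helper (`find_dotted_keys` is not part of OperatorFabric) that walks nested dictionaries and lists and reports the location of every key containing a dot. Dots in values are not reported, since the restriction concerns field names.

```python
def find_dotted_keys(value, path=""):
    """Return the locations of all dictionary keys that contain a dot."""
    found = []
    if isinstance(value, dict):
        for key, child in value.items():
            location = f"{path}/{key}"
            if "." in key:
                found.append(location)
            found.extend(find_dotted_keys(child, location))
    elif isinstance(value, list):
        for index, child in enumerate(value):
            found.extend(find_dotted_keys(child, f"{path}/{index}"))
    return found

data = {
    "message": "a value with a dot. is fine",
    "grid.status": {"ok": True},
    "items": [{"x.y": 1}],
}
print(find_dotted_keys(data))
```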

9.1.3. Presentation Information of the card

9.1.3.1. TimeSpans (timeSpans)

When the simple startDate and endDate are not enough to characterize your process business times, you can add a list of TimeSpan to your card. TimeSpans are rendered in the timeline component as cluster bubbles. This has no effect on the feed content.

Example:

To display the card twice in the timeline, you can add two TimeSpan to your card:

{

"publisher":"Dispatcher",

"processVersion":"0.1",

"process":"process",

"processInstanceId":"process-000",

"startDate":1546297200000,

"severity":"INFORMATION",

...

"timeSpans" : [

{"start" : 1546297200000},

{"start" : 1546297500000}

]

}

In this sample, the card will be displayed twice in the timeline. The card start date will be ignored.

For timeSpans, you can specify an end date but it is not implemented in OperatorFabric (it was intended for future uses but it will be deprecated).

9.2. Cards Examples

Before detailing the content of cards, let’s show what cards look like through a few json examples.

9.2.1. Minimal Card

The OperatorFabric Card specification defines mandatory attributes, but some optional attributes are needed for cards to be useful in OperatorFabric. Let’s clarify this point through a few examples of minimal cards and what happens when they are used as is.

9.2.1.1. Send to One User

The following card contains only the mandatory attributes.

{

"publisher":"Dispatcher",

"processVersion":"0.1",

"process":"process",

"processInstanceId":"process-000",

"state":"myState",

"startDate":1546297200000,

"severity":"INFORMATION",

"title":{"key":"card.title.key"},

"summary":{"key":"card.summary.key"},

"userRecipients": ["operator1"]

}

This is an information card about the process instance process-000 of process process, sent by Dispatcher. The title and the summary refer to i18n keys defined in the associated i18n files of the process. This card is displayable from the first of January 2019 and should only be received by the user using the operator1 login.

9.2.1.2. Send to several users

Simple case (sending to a group)

The following example is nearly the same as the previous one except for the recipient.

{

"publisher":"Dispatcher",

"processVersion":"0.1",

"process":"process",

"processInstanceId":"process-000",

"state":"myState",

"startDate":1546297200000,

"severity":"INFORMATION",

"title":{"key":"card.title.key"},

"summary":{"key":"card.summary.key"},

"groupRecipients": ["Dispatcher"]

}

Here, the recipient is a group, the Dispatcher. So all users who are members of this group will receive the card.

Simple case (sending to a group and an entity)

The following example is nearly the same as the previous one except for the recipient.

{

"publisher":"Dispatcher",

"processVersion":"0.1",

"process":"process",

"processInstanceId":"process-000",

"state":"myState",

"startDate":1546297200000,

"severity":"INFORMATION",

"title":{"key":"card.title.key"},

"summary":{"key":"card.summary.key"},

"groupRecipients": ["Dispatcher"],

"entityRecipients" : ["ENTITY1"]

}

Here, the recipients are a group and an entity, the Dispatcher group and the ENTITY1 entity. So all users who are members of both this group and this entity will receive the card.

Simple case (sending to an entity)

The following example is nearly the same as the previous one except for the recipient.

{

"publisher":"Dispatcher",

"processVersion":"0.1",

"process":"process",

"processInstanceId":"process-000",

"state":"myState",

"startDate":1546297200000,

"severity":"INFORMATION",

"title":{"key":"card.title.key"},

"summary":{"key":"card.summary.key"},

"entityRecipients" : ["ENTITY1"]

}

Here, the recipient is an entity and there are no groups anymore. So all users who have the right perimeter and who are members of this entity will receive the card. More information on perimeters can be found in the user management documentation.

Complex case

If this card needs to be viewed by a user who is not in the Dispatcher group, it is possible to tune the definition of the recipients more precisely. If operator2 also needs to see this card, the recipient definition could be (the following code details only the recipient part):

"groupRecipients": ["Dispatcher"], "userRecipients": ["operator2"]

So here, all the users of the Dispatcher group will receive the INFORMATION card, as will the operator2 user.

Another example, if a card is destined to the operators of Dispatcher and Planner and needs to be also seen by the admin, the recipient configuration looks like:

"groupRecipients": ["Dispatcher", "Planner"], "userRecipients": ["admin"]

There is an alternative way to declare the recipients of a card. This syntax is more complex and will be deprecated:

"recipient":{

"type":"UNION",

"recipients":[

{ "type": "GROUP", "identity":"Dispatcher"},

{ "type": "USER", "identity":"operator2"}

]

}
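The recipient rules described in this section can be summarized with a small sketch. It is a simplified reading of the text above, not the actual routing code: perimeters are ignored, the deprecated recipient syntax is not handled, and the function name and arguments are hypothetical.

```python
def is_recipient(card, login, groups, entities):
    """Tell whether a user receives a card, following the rules described above.

    groups and entities are the ones the user belongs to.
    Perimeter checks are deliberately left out of this sketch.
    """
    # A user listed explicitly always receives the card.
    if login in card.get("userRecipients", []):
        return True
    card_groups = set(card.get("groupRecipients", []))
    card_entities = set(card.get("entityRecipients", []))
    in_group = bool(card_groups & set(groups))
    in_entity = bool(card_entities & set(entities))
    if card_groups and card_entities:
        # Group and entity together: the user must belong to both.
        return in_group and in_entity
    if card_groups:
        return in_group
    return in_entity


card = {"groupRecipients": ["Dispatcher"], "userRecipients": ["operator2"]}
print(is_recipient(card, "operator2", ["Planner"], []))
```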

9.2.2. Regular Card

The previous cards were nearly empty regarding information carrying. In fact, cards are intended to contain more information than a title and a summary. The optional attribute data is here for that. This attribute is destined to contain any json object. The creator of the card is free to put any information needed as long as it’s in a json format.

9.2.2.1. Full of Hidden data

For this example we reuse the earlier card sent to operator1, adding a data attribute that contains a json object with two attributes: stringExample and numberExample.

{

"publisher":"Dispatcher",

"processVersion":"0.1",

"process":"process",

"processInstanceId":"process-000",

"state":"myState",

"startDate":1546297200000,

"severity":"INFORMATION",

"title":{"key":"card.title.key"},

"summary":{"key":"card.summary.key"},

"userRecipients": ["operator1"],

"data":{

"stringExample":"This is a not so random string of characters.",

"numberExample":123

}

}

This card contains some data, but when it is selected in the feed nothing more happens than with the previous example card, because there is no rendering configuration.

9.2.2.2. Fully useful

When a card is selected in the feed (of the GUI), the data is displayed in the detail panel.

The way details are formatted depends on the template contained in the bundle associated with the process, as described here. To have an effective example without too many actions to perform, the following example uses an already existing configuration: the one present in the development version of OperatorFabric for test purposes (TEST bundle).

At the card level, the attributes in the card telling OperatorFabric which template to use are the process and state attributes, the templateName can be retrieved from the definition of the bundle.

{

"publisher":"TEST_PUBLISHER",

"processVersion":"1",

"process":"TEST",

"processInstanceId":"process-000",

"state":"myState",

"startDate":1546297200000,

"severity":"INFORMATION",

"title":{"key":"process.title"},

"summary":{"key":"process.summary"},

"userRecipients": ["operator1"],

"data":{"rootProp":"Data displayed in the detail panel"}

}

Here a single custom data attribute, rootProp, is defined. This attribute is used by the template referenced by the templateName attribute.

10. Card rendering

As stated above, third-party applications interact with OperatorFabric by sending cards.

The Businessconfig service allows them to tell OperatorFabric, for each process, how these cards should be rendered, including translation if several languages are supported. Configuration is done via files zipped in a "bundle"; these files are sent to OperatorFabric via a REST endpoint.

In addition, it lets third-party applications define additional menu entries for the navbar (for example linking back to the third-party application) that can be integrated either as iframe or external links.

10.1. Process: Declaration and Configuration

To declare and configure a Process, OperatorFabric uses bundles.

This section describes their content and how to use them.

An OperatorFabric Process is a way to define a business configuration.

Once the bundle is fully created, it must be uploaded to the server through the Businessconfig service.

Some examples show how to configure a process using a bundle before diving in more technical details of the configuration.

The following instructions describe tests to perform on OperatorFabric to understand how customizations are possible.

The cards used in the examples of this section are automatically sent by a script described here.

10.1.1. Bundle as Process declaration

A bundle contains all the configuration regarding a given business process, describing for example the various steps of the process but also how the associated cards and card details should be displayed.

Bundles are technically tar.gz archives containing at least a descriptor file named config.json.

To display the card data, some css files, i18n files and handlebars templates must be added.

For didactic purposes, in this section, the businessconfig bundle name is BUNDLE_TEST (to match the parameters used by the script).

The l10n (localization) configurations are English, referred to as en, and French, referred to as fr.

As detailed in the Businessconfig core service README the bundle contains at least a metadata file called config.json, a css folder, an i18n folder and a template folder.

Except for the config.json file, all elements are optional.

The file organization within a bundle:

bundle

├── config.json

├── css

│   └── bundleTest.css

├── i18n

│   ├── en.json

│   └── fr.json

└── template

    ├── en

    │   ├── template1.handlebars

    │   └── template2.handlebars

    └── fr

        ├── template1.handlebars

        └── template2.handlebars
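As an illustration of the layout above, a bundle archive could be assembled as follows. This is a sketch under assumptions: the helper name is hypothetical, and it assumes that config.json must sit at the root of the archive.

```python
import io
import tarfile


def build_bundle(files):
    """Build a tar.gz archive in memory from a mapping {path: text content}."""
    if "config.json" not in files:
        raise ValueError("a bundle must contain at least config.json")
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w:gz") as archive:
        for path, text in files.items():
            data = text.encode("utf-8")
            info = tarfile.TarInfo(name=path)
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    return buffer.getvalue()


bundle = build_bundle({
    "config.json": '{"id": "TEST", "name": "process.label", "version": "1"}',
    "i18n/en.json": '{"process": {"label": "Test Process"}}',
    "template/en/template1.handlebars": "<h2>Template Number One</h2>",
})
print(len(bundle) > 0)
```

The resulting bytes can be written to a file such as bundle-test.tar.gz before upload.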

10.1.2. The config.json file

It’s a description file in json format.

It lists the content of the bundle.

example

{

"id": "TEST",

"version": "1",

"uiVisibility": {

"monitoring": true,

"logging": true,

"calendar": true

},

"name": "process.label",

"defaultLocale": "fr",

"states": {

"firstState": {

"name": "state.label",

"color": "blue",

"templateName": "operation",

"acknowledgmentAllowed": "Never"

}

}

}

-

id: id of the process;

-

name: process name (i18n key);

-

version: enables the correct display of the card data, even for old cards. The server stores the previous versions in its file system. This field value should match a businessconfig configuration for a correct rendering;

-

states: lists the available states, each of which declares associated actions, associated templates and whether cards can be acknowledged by users.

The mandatory fields are id, name and version.

See the Businessconfig API documentation for details.
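Before uploading a bundle, the mandatory fields listed above can be checked locally. The snippet below is a minimal sketch with a hypothetical function name; the server performs its own validation, which may be stricter.

```python
import json

MANDATORY_FIELDS = ("id", "name", "version")


def missing_mandatory_fields(config):
    """Return the mandatory config.json fields that are absent or empty."""
    return [field for field in MANDATORY_FIELDS if not config.get(field)]


config = json.loads('{"id": "TEST", "version": "1", "states": {}}')
print(missing_mandatory_fields(config))
```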

10.1.3. i18n

There are two ways to handle internationalization (i18n) in the businessconfig service.

The first one uses l10n files located in the i18n folder, the second one uses folders named after each l10n, nested in the template folder.

The i18n folder contains one json file per l10n.

The integration of the businessconfig service into OperatorFabric needs this localization, i.e. the label displayed for the process, the state, the label displayed for the details of the card…

10.1.3.1. Template folder

The template folder must contain one folder per l10n for the i18n of the card details.

This is why in our example, as the bundle contains an en and a fr l10n, the template folder contains an en and a fr folder.

i18n file

If there is no i18n file or if a key is missing, OperatorFabric displays the i18n key itself, such as BUNDLE_TEST.1.missing-i18n-key in the case where the bundle declares no translation for the key missing-i18n-key.

The choice of i18n keys is up to the maintainer of the Businessconfig process.
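The lookup of a dotted i18n key in a nested l10n file, with a fallback to the key when no translation exists, can be sketched as follows. It is an illustration only: the function name is hypothetical, and the sketch returns the bare key, whereas OperatorFabric displays it prefixed with the process id and version as described above.

```python
import re


def translate(translations, key, params=None):
    """Resolve a dotted i18n key and fill in its {{name}} parameters."""
    node = translations
    for part in key.split("."):
        if not isinstance(node, dict) or part not in node:
            return key  # no translation: fall back to the key itself
        node = node[part]
    if not isinstance(node, str):
        return key
    params = params or {}
    # Replace each {{name}} with its value, leaving unknown names untouched.
    return re.sub(
        r"\{\{(\w+)\}\}",
        lambda match: str(params.get(match.group(1), match.group(0))),
        node,
    )


en = {"TEST": {"title": "Test: Process {{value}}"}}
print(translate(en, "TEST.title", {"value": "42"}))
print(translate(en, "missing-i18n-key"))
```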

Example

For this example, the name of the process is Bundle Test and its technical name is BUNDLE_TEST.

The bundle provides an english and a french l10n.

Title and summary have to be localized.

Here is the content of en.json

{

"TEST": {

"title": "Test: Process {{value}}",

"summary": "This sums up the content of the card: {{value}}",

"detail": {

"title": "card title"

}

},

"process": {

"label": "Test Process"

},

"state": {

"label": "Test State"

},

"template": {

"title": "Asset details"

}

}

Here is the content of fr.json

{

"TEST":{

"title": "Test: Processus {{value}}",

"summary": "Cela résume la carte: {{value}}",

"detail": {

"title": "Titre de la carte"

}

},

"process":{

"label": "Processus de test"

},

"state": {

"label": "State de test"

},

"menu":{

"label": "Menu du Processus de Test",

"first":"Documentation Operator Fabric",

"second":"LF Energy"

},

"template": {

"title": "Onglet TEST"

}

}

To check the i18n, after the upload of the bundle, use a GET request against the businessconfig service.

The simplest way is to ask for the i18n file, as described here.

Set the locale language, the version of the bundle and the technical name of the businessconfig party to get the json in the response.

For example, to check the French l10n data of version 1 of the BUNDLE_TEST businessconfig party, use the following command line:

curl "http://localhost:2100/businessconfig/processes/BUNDLE_TEST/i18n?locale=fr&version=1" \

-H "Authorization: Bearer ${token}"

where ${token} is a valid token for operatorfabric use.

The businessconfig service should answer with a 200 status associated with the following json:

{

"TEST": {

"title": "Test: Process {{value}}",

"summary": "This sums up the content of the card: {{value}}",

"detail": {

"title": "card title"

}

},

"process": {

"label": "Test Process"

},

"state": {

"label": "Test State"

},

"template": {

"title": "Asset details"

}

}

10.1.3.2. Processes and States

Each Process declares associated states. Each state declares specific templates for card details and specific actions.

For question cards, you can choose to display the card header or not. This header contains the list of entities that have already responded or not, and a countdown indicating the time remaining to respond, if necessary.

This setting is done using the showDetailCardHeader variable, in the definition of the process state, in the config.json file.

The purpose of this section is to display elements of businessconfig card data in a custom format.

Regarding the card detail customization, all the examples in this section refer to the cards generated by the script existing in the Cards-Publication project.

For the examples given here, run this script with arguments detailed in the following command line:

$OPERATOR_FABRIC_HOME/services/core/cards-publication/src/main/bin/push_card_loop.sh BUNDLE_TEST tests

where:

-

$OPERATOR_FABRIC_HOME is the root folder of OperatorFabric used to perform tests;

-

BUNDLE_TEST is the name of the Businessconfig party;

-

tests is the name of the process referred to by published cards.

configuration

The process entry in the configuration file is a dictionary of processes, each key maps to a process definition. A process definition is itself a dictionary of states, each key maps to a state definition.

Templates

For demonstration purposes, there will be two simple templates.

For more advanced features, go to the section detailing the handlebars templates and associated helpers available in OperatorFabric.

As the cards used in this example are created above, the bundle template folder needs to contain 2 templates: template1.handlebars and template2.handlebars.

Examples of template (i18n versions)

The following templates display a l10n title and a line containing the value of the scope property card.level1.level1Prop.

In english, the translation of this key is 'This is a root property'.

/template/en/template1.handlebars

<h2>Template Number One</h2>

<div class="bundle-test">'{{card.data.level1.level1Prop}}'</div>

/template/fr/template1.handlebars

<h2>Patron numéro Un</h2>

<div class="bundle-test">'{{card.data.level1.level1Prop}}'</div>

The two following template examples also display a l10n title and a list of numeric values from 1 to 3.

/template/en/template2.handlebars

<h2>Second Template</h2>

<ul class="bundle-test-list">

{{#each card.data.level1.level1Array}}

<li class="bundle-test-list-item">{{this.level1ArrayProp}}</li>

{{/each}}

</ul>

/template/fr/template2.handlebars

<h2>Second patron</h2>

<ul class="bundle-test-list">

{{#each card.data.level1.level1Array}}

<li class="bundle-test-list-item">{{this.level1ArrayProp}}</li>

{{/each}}

</ul>

CSS

This folder contains regular css files.

The file name must be declared in the config.json file in order to be used in the templates and applied to them.

As above, all parts of files irrelevant for our example are symbolised by a … character.

Declaration of css files in config.json file

{

…

"states" : {

"state1" : {

…

"styles":["bundleTest"]

}

}

…

}

CSS Class used in ./template/en/template1.handlebars

…

<div class="bundle-test">'{{card.data.level1.level1Prop}}'</div>

…

As seen above, the value of {{card.data.level1.level1Prop}} of a test card is This is a level1 property

Style declaration in ./css/bundleTest.css

h2{

color:#fd9312;

font-weight: bold;

}

Expected result

10.1.3.3. Upload

To upload a bundle to the OperatorFabric server, use a POST http request as described in the Businessconfig Service API documentation.

Example

cd ${BUNDLE_FOLDER}

curl -X POST "http://localhost:2100/businessconfig/processes" \
  -H "accept: application/json" \
  -H "Content-Type: multipart/form-data" \
  -F "file=@bundle-test.tar.gz;type=application/gzip"

Where:

-

${BUNDLE_FOLDER} is the folder containing the bundle archive to be uploaded.

-

bundle-test.tar.gz is the name of the uploaded bundle.

This command line should return a 200 http status response with the details of the bundle in the response body, such as :

{

"id":"BUNDLE_TEST",

"name": "BUNDLE_TEST",

"version": "1",

"states" : {

"start" : {

"templateName" : "template1"

},

"end" : {

"templateName" : "template2",

"styles" : [ "bundleTest.css" ]

}

}

}

For further help, check the Troubleshooting section, which summarizes how to resolve common problems.

10.1.4. Processes groups

OperatorFabric offers the possibility of defining process groups. These groups have an impact only on the UI, for example on the notification configuration screen, by offering a more organized view of all the processes.

| A process can only belong to one process group. |

To define processes groups, you have to upload a file via a POST http request as described in the

Example

cd ${PROCESSES_GROUPS_FOLDER}

curl -X POST "http://localhost:2100/businessconfig/processgroups" \
  -H "accept: application/json" \
  -H "Content-Type: multipart/form-data" \
  -F "file=@processesGroups.json" \
  -H "Authorization: Bearer ${token}"

Where:

-

${PROCESSES_GROUPS_FOLDER} is the folder containing the processes groups file to upload.

-

processesGroups.json is the name of the uploaded file.

-

${token} is a valid token for OperatorFabric use.

Example of content for uploaded file :

{

"groups": [

{

"id": "processgroup1",

"processes": [

"process1",

"process2"

]

},

{

"id": "processgroup2",

"processes": [

"process3",

"process4"

]

}

],

"locale": {

"en": {

"processgroup1": "Process Group 1",

"processgroup2": "Process Group 2"

},

"fr": {

"processgroup1": "Groupe de process 1",

"processgroup2": "Groupe de process 2"

}

}

}

This command line should return a 201 http status.
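Since a process can only belong to one process group, it can be worth checking the file before uploading it. The following sketch does so; the function name is hypothetical and the check only mirrors the constraint stated above.

```python
from collections import defaultdict


def processes_in_several_groups(definition):
    """Return {process id: [group ids]} for processes listed in more than one group."""
    groups_by_process = defaultdict(list)
    for group in definition.get("groups", []):
        for process in group.get("processes", []):
            groups_by_process[process].append(group["id"])
    return {
        process: group_ids
        for process, group_ids in groups_by_process.items()
        if len(group_ids) > 1
    }


definition = {
    "groups": [
        {"id": "processgroup1", "processes": ["process1", "process2"]},
        {"id": "processgroup2", "processes": ["process2", "process4"]},
    ]
}
print(processes_in_several_groups(definition))
```

An empty dictionary means the file respects the constraint.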

10.2. Templates

Templates are Handlebars template files. Templates are then filled with data coming from two sources:

-

a card property (See card data model for more information)

-

a userContext :

-

login: user login

-

token: user jwt token

-

firstName: user first name

-

lastName: user last name

In addition to Handlebars basic syntax and helpers, OperatorFabric defines the following helpers :

10.2.1. OperatorFabric specific handlebars helpers

10.2.1.1. arrayContains

Verify if an array contains a specified element. If the array does contain the element, it returns true. Otherwise, it returns false.

<p {{#if (arrayContains colors 'red')}}class="text-danger"{{/if}}>test</p>

If the colors array contains 'red', the output is:

<p class="text-danger">test</p>

10.2.1.2. arrayContainsOneOf

If the first array contains at least one element of the second array, return true. Otherwise, return false.

{{#if (arrayContainsOneOf arr1 arr2)}}

<p>Arr1 contains at least one element of arr2</p>

{{/if}}

10.2.1.3. bool

returns a boolean result value on an arithmetical operation (including object equality) or boolean operation.

arguments:

-

v1: left value operand

-

op: operator (string value)

-

v2: right value operand

arithmetical operators:

-

==

-

===

-

!=

-

!==

-

<

-

<=

-

>

-

>=

boolean operators:

-

&&

-

||

examples:

{{#if (bool v1 '<' v2)}}

v1 is strictly lower than v2

{{else}}

V2 is lower or equal to v1

{{/if}}

10.2.1.4. conditionalAttribute

Adds the specified attribute to an HTML element if the given condition is truthy.

This is useful for attributes such as checked where it is the presence or absence of the attribute that matters (i.e. a checkbox with checked=false will still be checked).

<input type="checkbox" id="optionA" {{conditionalAttribute card.data.optionA 'checked'}}></input>

10.2.1.5. dateFormat

formats the submitted parameter (milliseconds since epoch) using moment.format. The locale used is the one currently selected by the user, the format is the "format" hash parameter (see Handlebars doc Literals section).

{{dateFormat card.data.birthday format="MMMM Do YYYY, h:mm:ss a"}}

10.2.1.6. i18n

outputs an i18n result from a key and some parameters. There are two ways of configuration :

-

Pass an object as sole argument. The object must contain a key field (string) and an optional parameter field (map of parameterKey ⇒ value)

{{i18n card.data.i18nTitle}}

-

Pass a string key as sole argument and use hash parameters (see Handlebars doc Literals section) for i18n string parameters.

<!--

emergency.title=Emergency situation happened on {{date}}. Cause : {{cause}}.

-->

{{i18n "emergency.title" date="2018-06-14" cause="Broken Coffee Machine"}}

outputs

Emergency situation happened on 2018-06-14. Cause : Broken Coffee Machine.

10.2.1.7. json

Convert the element in json, this can be useful to use the element as a javascript object in the template. For example :

var myAttribute = {{json data.myAttribute}};

10.2.1.8. keepSpacesAndEndOfLine

Convert a string to a light HTML by replacing :

-

each new line character with <br/>

-

spaces with &nbsp; when there are at least two consecutive spaces.
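The conversion can be pictured with the sketch below. It is an approximation written for illustration, not the helper's source: it assumes that each space of a run of two or more spaces becomes a non-breaking space entity, and the function name is hypothetical.

```python
import re


def keep_spaces_and_end_of_line(text):
    """Turn new lines into <br/> and runs of 2+ spaces into &nbsp; entities."""
    with_breaks = text.replace("\n", "<br/>")
    # Single spaces are left alone; longer runs are preserved one entity per space.
    return re.sub(r" {2,}", lambda run: "&nbsp;" * len(run.group(0)), with_breaks)


print(keep_spaces_and_end_of_line("line one\ncolumn A   column B"))
```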

10.2.1.9. keyValue

This allows to traverse a map.

Notice that this should normally be feasible by using the built-in each helper, but a client was having some troubles using it so we added this custom helper.

{{#keyValue studentGrades}}

<p>{{key}}: {{value}}</p>

{{/keyValue}}

If the value of the studentGrades map is:

{

'student1': 15,

'student2': 12,

'student3': 9

}

The output will be:

<p>student1: 15</p> <p>student2: 12</p> <p>student3: 9</p>

10.2.1.10. math

returns the result of a mathematical operation.

arguments:

-

v1: left value operand

-

op: operator (string value)

-

v2: right value operand

arithmetical operators:

-

+

-

-

-

*

-

/

-

%

example:

{{math 1 '+' 2}}
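The behavior of this helper amounts to dispatching on the operator string. Here is a rough equivalent, given only to make the argument order explicit; the function name is hypothetical, and Python's % differs from JavaScript's for negative operands.

```python
import operator

# Operators accepted by the helper, mapped to their arithmetic function.
OPERATIONS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
    "%": operator.mod,
}


def math_helper(v1, op, v2):
    """Apply the arithmetical operator op to the operands v1 and v2."""
    if op not in OPERATIONS:
        raise ValueError(f"unsupported operator: {op}")
    return OPERATIONS[op](v1, v2)


print(math_helper(1, "+", 2))
```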

10.2.1.11. mergeArrays

Return an array that is a merge of the two arrays.

{{#each (mergeArrays arr1 arr2)}}

<p>{{@index}} element: {{this}}</p>

{{/each}}

10.2.1.12. now

outputs the current date in millisecond from epoch. The date is computed from application internal time service and thus may be different from the date that one can compute from javascript api which relies on the browsers' system time.

NB: Due to a Handlebars limitation, you must provide at least one argument to helpers, otherwise Handlebars will confuse a helper and a variable. In the example below, we simply pass an empty string.

example:

<div>{{now ""}}</div>

<br>

<div>{{dateFormat (now "") format="MMMM Do YYYY, h:mm:ss a"}}</div>

outputs

<div>1551454795179</div> <br> <div>mars 1er 2019, 4:39:55 pm</div>

for a locale set to FR_fr

10.2.1.13. numberFormat

formats a number parameter using Intl.NumberFormat (developer.mozilla.org/fr/docs/Web/JavaScript/Reference/Objets_globaux/NumberFormat). The locale used is the one currently selected by the user, and options are passed as hash parameters (see Handlebars doc Literals section).

{{numberFormat card.data.price style="currency" currency="EUR"}}

10.2.1.14. preserveSpace

preserves space in parameter string to avoid html standard space trimming.

{{preserveSpace card.data.businessId}}

10.2.1.15. slice

extracts a sub array from an array

example:

<!--

{"array": ["foo","bar","baz"]}

-->

<ul>

{{#each (slice array 0 2)}}

<li>{{this}}</li>

{{/each}}

</ul>

outputs:

<ul> <li>foo</li> <li>bar</li> </ul>

and

<!--

{"array": ["foo","bar","baz"]}

-->

<ul>

{{#each (slice array 1)}}

<li>{{this}}</li>

{{/each}}

</ul>

outputs:

<ul> <li>bar</li> <li>baz</li> </ul>

10.2.1.16. sort

sorts an array or some object’s properties (first argument) using an optional field name (second argument) to sort the collection on this field’s natural order.

If there is no field argument provided :

-

for an array, the original order of the array is kept ;

-

for an object, the structure is sorted by the object field name.

<!--

users :

{"john": { "firstName": "John", "lastName": "Cleese"},

"graham": { "firstName": "Graham", "lastName": "Chapman"},

"terryG": { "firstName": "Terry", "lastName": "Gilliam"},

"eric": { "firstName": "Eric", "lastName": "Idle"},

"terryJ": { "firstName": "Terry", "lastName": "Jones"},

"michael": { "firstName": "Michael", "lastName": "Palin"}}

-->

<ul>

{{#each (sort users)}}

<li>{{this.firstName}} {{this.lastName}}</li>

{{/each}}

</ul>

outputs :

<ul> <li>Eric Idle</li> <li>Graham Chapman</li> <li>John Cleese</li> <li>Michael Palin</li> <li>Terry Gilliam</li> <li>Terry Jones</li> </ul>

and

<ul>

{{#each (sort users "lastName")}}

<li>{{this.firstName}} {{this.lastName}}</li>

{{/each}}

</ul>

outputs :

<ul> <li>Graham Chapman</li> <li>John Cleese</li> <li>Terry Gilliam</li> <li>Eric Idle</li> <li>Terry Jones</li> <li>Michael Palin</li> </ul>
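The ordering rules of this helper can be restated with a short sketch. It follows the description given in this section rather than the helper's source, and the function name is hypothetical.

```python
def sort_helper(collection, field=None):
    """Return the items of an array or object, ordered as described above."""
    if isinstance(collection, dict):
        if field is None:
            # Object without field: order the values by their property name.
            return [collection[name] for name in sorted(collection)]
        return sorted(collection.values(), key=lambda item: item[field])
    if field is None:
        # Array without field: the original order is kept.
        return list(collection)
    return sorted(collection, key=lambda item: item[field])


users = {
    "john": {"firstName": "John", "lastName": "Cleese"},
    "graham": {"firstName": "Graham", "lastName": "Chapman"},
    "eric": {"firstName": "Eric", "lastName": "Idle"},
}
print([user["lastName"] for user in sort_helper(users, "lastName")])
```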

10.2.1.17. split

splits a string into an array based on a split string.

example:

<ul>

{{#each (split 'my.example.string' '.')}}

<li>{{this}}</li>

{{/each}}

</ul>

outputs

<ul> <li>my</li> <li>example</li> <li>string</li> </ul>

10.2.1.18. svg

outputs a svg tag with lazy loading, and missing image replacement message. The image url is the concatenation of an arbitrary number of helper arguments

{{{svg baseUri scheduledOpId "/" substation "/before/"

computationPhaseOrdinal}}}

10.2.2. OperatorFabric css styles

OperatorFabric defines css classes that you should use so your templates don’t clash with the rest of the OperatorFabric look and feel:

-

opfab-input : input field

-

opfab-textarea : text area input field

-

opfab-select : select input field

-

opfab-radio-button : radio button input field

-

opfab-checkbox : checkbox input field

-

opfab-table : a HTML table

-

opfab-border-box : a box with a label

These styles are especially useful for templates used in user card or card with responses.

You can find examples using these classes in the OperatorFabric core repository (src/test/resources/bundles).

10.2.3. Charts

The library chart.js is integrated in OperatorFabric, which means it is possible to show charts in cards. You can find a bundle example in the OperatorFabric git repository (src/test/resources/bundle/defaultProcess_V1).

10.2.4. OperatorFabric specific functions

10.2.4.1. Set screen size

To adapt the template content on screen size it is possible to receive from OperatorFabric information on the size of the window where the template will be rendered. To receive screen size information you need to implement a javascript function in your template called templateGateway.setScreenSize(size) which receives as input a string parameter with one of the following values :

-

'md' : medium size window

-

'lg' : large size window

10.2.4.2. Redirect to business application from a card

It’s possible to redirect from a card to a business application declared in ui-menu.json.

This can be done by calling the following function from the template :

templateGateway.redirectToBusinessMenu(idMenu,idEntry)

-

idMenu is the id of the menu defined in ui-menu.json

-

idEntry is the id of the entry defined in ui-menu.json

It is also possible to append parameters to the url that will be called:

templateGateway.redirectToBusinessMenu('myMenu','myEntry','param1=aParam&param2=anotherParam')

For example:

<a href="javascript:templateGateway.redirectToBusinessMenu('menu1','uid_test_0','search=chart&fulltext=1')"> Want more information about charts ? </a>

This can be useful to pass context from the card to the business application.

11. Card notification

When a user receives a card, he is notified via a summary of the card in the left panel of the UI, which is called the "feed".

11.1. Notification configuration

For each process/state, the user can choose to be notified or not when receiving a card. If he chooses not to be notified then the card will not be visible in the feed. However, it will be visible in the archives screen.

The notification configuration screen can be made accessible or not for the OperatorFabric instance via the variable feedConfiguration.hidden in the web-ui.json file.

In order to have a better visual organization of the processes in the UI, you can define processes groups. You can find more information here

11.2. Sound notification

If the option is activated in the general configuration file web-ui.json, the user can choose to have a sound played when a card arrives. This is configured by the user in the settings menu.

11.3. Card read

When the user receives a new card, he sees it in the feed with an eye icon on the right of the card summary. When he opens the card, the icon disappears.

11.4. Card acknowledgment

The user can set a card as "acknowledged" so he will not see it anymore by default in the feed. It is also possible to cancel this and set the card back to "unacknowledged" (a filter makes it possible to see acknowledged cards).

To let the user acknowledge cards, this has to be configured in the process definition. The configuration is done on a state by setting the acknowledgmentAllowed field. Allowed values are:

-

"Never": acknowledgement not allowed (default value)

-

"Always": acknowledgement allowed

-

"OnlyWhenResponseDisabledForUser": acknowledgement allowed only when the response is disabled for the user

You can see examples in src/test/resources/bundles/defaultProcess_V1/config.json

11.5. Card reminder

For certain processes and states, it is possible to configure a reminder. The reminder "reactivates" the card in the feed at a certain time. "Reactivate" means setting the card to the status "unread" and "unacknowledged".

The time for "reactivation" is defined with the parameter "secondsBeforeTimeSpanForReminder" in the card.

The reminder is computed from the timespans values :

-

the startDate

-

or recurrently if a recurrence object is defined.

11.5.1. Simple reminder

If a timespan is present without a recurrence object, a reminder will arise at startDate - secondsBeforeTimeSpanForReminder.

11.5.2. Recurrent reminder

It is possible to set a recurrent reminder via the recurrence structure, which defines a regular event in the timespan structure. It is defined with the following fields :

-

hoursAndMinutes : hours and minutes of the day when the event occurs

-

daysOfWeek : a list of days of the week when the event occurs. The day of week is a number with 1 being Monday and 7 being Sunday as defined in the ISO Standard 8601 (weekday number)

-

timeZone : the time zone of reference for the recurrence definition (default value is Europe/Paris)

-

durationInMinutes : the duration in minutes of the event

The reminder will arise for each recurrent date of event - secondsBeforeTimeSpanForReminder starting from startDate.

11.5.2.1. Recurrent reminder example

If the timespan is defined as follows :

startDate : 1231135161

recurrence : {

hoursAndMinutes : { hours:10 ,minutes:30},

daysOfWeek : [6,7],

durationInMinutes : 15

}

If secondsBeforeTimeSpanForReminder is set to 600 seconds, the reminder will be raised every Saturday and Sunday at 10:20, starting from startDate.
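The arithmetic of this example can be reproduced with the sketch below. It is a simplified illustration, not the UI's reminder code: the function name is hypothetical, time zones are ignored (all datetimes are naive), and durationInMinutes is not used.

```python
from datetime import datetime, timedelta


def next_reminder(after, hours, minutes, days_of_week, seconds_before):
    """Return the first reminder date at or after `after`.

    days_of_week uses ISO numbering (1 = Monday ... 7 = Sunday).
    """
    event = after.replace(hour=hours, minute=minutes, second=0, microsecond=0)
    for _ in range(8):  # a matching weekday is found within 8 candidate days
        reminder = event - timedelta(seconds=seconds_before)
        if event.isoweekday() in days_of_week and reminder >= after:
            return reminder
        event += timedelta(days=1)
    raise ValueError("days_of_week must contain values between 1 and 7")


# Friday 20 November 2020 at noon, recurrence on Saturday and Sunday at 10:30.
start = datetime(2020, 11, 20, 12, 0)
print(next_reminder(start, 10, 30, [6, 7], 600))
```

With these values, the first reminder falls on the Saturday at 10:20, ten minutes before the event.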

11.5.3. Last time for reminding

If the user is not connected at the time of the reminder, then when he connects, no reminder is raised if the current time is more than 15 minutes after the event date.

11.5.4. Debugging

When the user receives a card with a reminder to set, the browser console log contains a line with the date at which the reminder will be raised. For example:

2020-11-22T21:00:36.011Z Reminder Will remind card userCardExamples.0cf5537b-f0df-4314-f17f-2797ccd8e4e9 at Sun Nov 22 2020 22:55:00 GMT+0100 (heure normale d’Europe centrale)

12. Response cards

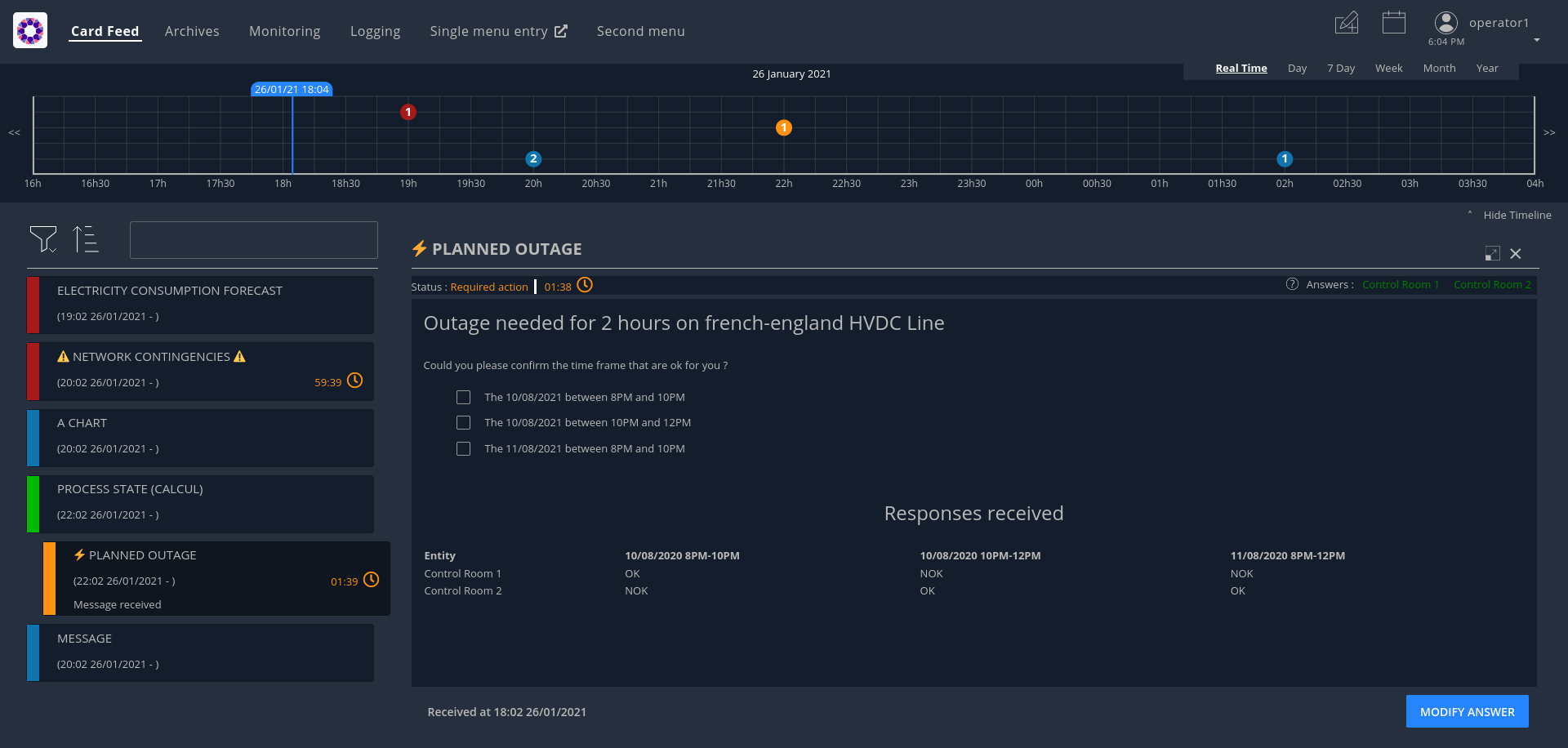

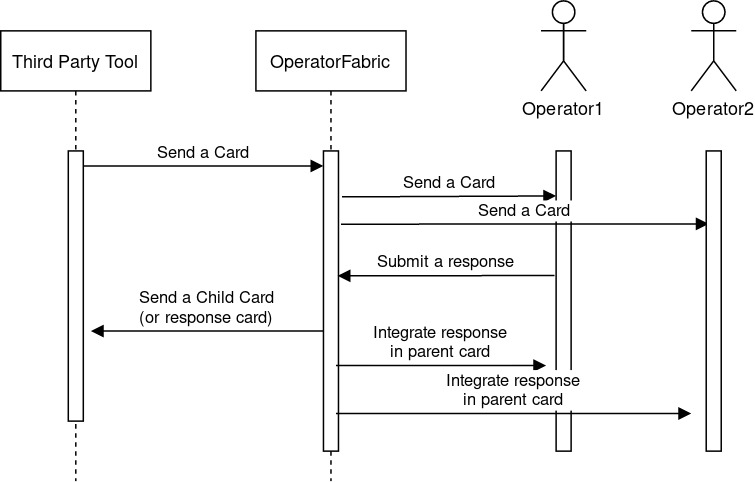

Within your template, you can allow the user to perform some actions (respond to a form, answer a question, …). The user fills these information and then clicks on a submit button. When he submits this action, a new card is created and emitted to a third-party tool.

This card is called "a child card" as it is attached to the card where the question came from : "the parent card". This child card is also sent to the users that have received the parent card. From the ui point of view, the information of the child cards can be integrated in real time in the parent card if configured.

The process can be represented as follows :

Notice that the response will be associated to the entity and not to the user, i.e the user responds on behalf of his entity. A user can respond more than one time to a card (a future evolution could add the possibility to limit to one response per entity).

You can view a screenshot of an example of card with responses :

12.1. Steps needed to use a response card

12.1.1. Define a third party tool

The response card is to be received by a third-party application for business processing. The third-party application will receive the card as an HTTP POST request. The card is in JSON format (the same format as when a card is sent). The data field of the JSON contains the user response.
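
On the third-party side, handling the body of this POST request could look like the sketch below. The function name handleResponseCard and the returned status codes are assumptions made for illustration; only the card fields data, process, processInstanceId and state come from the card format.

```javascript
// Hypothetical handler: call it from the POST route of whatever HTTP framework you use,
// passing the raw request body as a string.
function handleResponseCard(rawBody) {
  let card;
  try {
    card = JSON.parse(rawBody);
  } catch (e) {
    return { status: 400, error: 'Body is not valid JSON' };
  }
  if (card === null || typeof card !== 'object' || card.data === undefined) {
    return { status: 400, error: 'Missing "data" field' };
  }
  // The user response is carried in the "data" field of the child card.
  return {
    status: 200,
    process: card.process,
    processInstanceId: card.processInstanceId,
    state: card.state,
    userResponse: card.data
  };
}
```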

The url of the third party receiving the response card is to be set in the .yml of the publication service. Here is an example with two third parties configured.

externalRecipients-url: "{\

third-party1: \"http://thirdparty1/test1\", \

third-party2: \"http://thirdparty2:8090/test2\", \

}"

The name to use for the third-party is the publisherId of the parent card.

| For the URL, do not use localhost if you run OperatorFabric in Docker, as the publication service will not be able to reach your third party. |

12.1.2. Configure the response in config.json

A card can have a response only if it is in a process/state that is configured for it. To do that, you need to define the appropriate configuration in the config.json of the concerned process. Here is an example of configuration:

{

"id": "defaultProcess",

"name": "Test",

"version": "1",

"states": {

"questionState": {

"name": "question.title",

"color": "#8bcdcd",

"response": {

"state": "responseState",

"externalRecipients":["externalRecipient1", "externalRecipient2"],

"btnColor": "GREEN",

"btnText": {

"key": "question.button.text"

}

},

"templateName": "question",

"styles": [

"style"

],

"acknowledgmentAllowed": "Never"

}

}

}

We define here a state named "questionState" with a response field. Now, if we send a card with process "defaultProcess" and state "questionState", the user will be able to respond if they have the required privileges.

-

The field "state" in the response field is used to define the state to use for the response (the child card).

-

The field "externalRecipients" defines the recipients of the response card. These recipients are keys referenced in the config file of the cards-publication service, in the "externalRecipients-url" element. This field is optional.

-

The field "btnColor" defines the color of the submit button for the response. It is optional and there are 3 possible values: RED, GREEN, YELLOW

-

The field "btnText" is the i18n key of the title of the submit button, it is optional.

| The state to be used for the response can also be set dynamically based on the contents of the card or the response by returning it in the templateGateway.getUserResponse method (see below for details). |

12.1.3. Design the question form in the template

For the user to be able to respond, you need to define the response form in the template with standard HTML syntax.

To enable OperatorFabric to send the response, you need to implement a JavaScript function in your template called templateGateway.getUserResponse, which returns an object containing four fields:

-

valid (boolean) : true if the user input is valid

-

errorMsg (string) : message in case of invalid user input. If valid is true this field is not necessary.

-

responseCardData (any) : the user input to send in the data field of the child card. If valid is false this field is not necessary.

-

responseState : name of the response state to use. This field is not mandatory; if it is not set, the state defined in config.json will be used for the response.

This method will be called by OperatorFabric when the user clicks on the button to send the response.
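
To illustrate how the four fields fit together, the sketch below shows how a caller might interpret the returned object. It is not OperatorFabric's actual code: the function resolveUserResponse and the shape of its return value are hypothetical.

```javascript
// Hypothetical illustration of how the object returned by getUserResponse can be interpreted.
// configuredResponseState stands for the "state" set in the response field of config.json.
function resolveUserResponse(userResponse, configuredResponseState) {
  if (!userResponse.valid) {
    // Invalid input: nothing is sent, errorMsg is to be shown to the user.
    return { send: false, errorMsg: userResponse.errorMsg };
  }
  return {
    send: true,
    // responseState, when provided, takes precedence over the state from config.json.
    state: userResponse.responseState || configuredResponseState,
    data: userResponse.responseCardData
  };
}
```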

In the example below, getUserResponse creates a responseCardData object by retrieving the user’s inputs from the HTML. In addition, if the user chose several options, it overrides the response state defined in the config.json with another state.

templateGateway.getUserResponse = function() {

const responseCardData = {};

const formElement = document.getElementById('question-form');

for (const [key, value] of [... new FormData(formElement)]) {

(key in responseCardData) ? responseCardData[key].push(value) : responseCardData[key] = [value];

}

const result = {

valid: true,

responseCardData: responseCardData

};

// If the user chose several options, we decide to move the process to a specific state, for example to ask a follow-up question (what's their preferred option).

const choiceRequiresFollowUp = Object.entries(responseCardData).length>1;

if(choiceRequiresFollowUp) result['responseState'] = 'multipleOptionsResponseState';

return result;

};

12.1.4. Define permissions

To respond to a card, a user must have the right privileges. This is done using "perimeters". The user must be in a group that is attached to a perimeter with the right "Write" for the concerned process/state, the state being the response state defined in the config.json.

Here is an example of definition of a perimeter :

{

"id" : "perimeterQuestion",

"process" : "defaultProcess",

"stateRights" : [

{

"state" : "responseState",

"right" : "Write"

}

]

}

To configure it in OperatorFabric, you need to POST this JSON file to the endpoint /users/perimeters.

To add it to a group named, for example, "mygroup", you need to make a PATCH request to the endpoint 'users/groups/mygroup/perimeters' with the payload ["perimeterQuestion"]
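
The two requests can be prepared as in the sketch below. The helper buildPerimeterRequests is hypothetical, and the base URL and the bearer-token Authorization header are assumptions to adapt to your installation; only the two endpoints and the payloads come from the description above.

```javascript
// Hypothetical helper: builds the POST (create perimeter) and PATCH (attach to group) requests.
// Assumptions: baseUrl is where your OperatorFabric instance is exposed, token is a valid access token.
function buildPerimeterRequests(baseUrl, token, perimeter, groupId) {
  const headers = {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${token}`
  };
  return [
    {
      url: `${baseUrl}/users/perimeters`,
      options: { method: 'POST', headers, body: JSON.stringify(perimeter) }
    },
    {
      url: `${baseUrl}/users/groups/${encodeURIComponent(groupId)}/perimeters`,
      options: { method: 'PATCH', headers, body: JSON.stringify([perimeter.id]) }
    }
  ];
}
```

Each returned entry can then be passed to fetch(url, options), or translated into the equivalent curl command.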

| If you don’t want OperatorFabric to check for user perimeter when responding to a card, you can add the variable "checkPerimeterForResponseCard" and set it to false, in the config file of cards-publication and in web-ui.json. |

12.2. Send a question card

The question card is like a usual card, except that you have to set the field "entitiesAllowedToRespond" with the entities allowed to respond to the card. If the user does not belong to one of these entities, they will not be able to respond.

... "process" :"defaultProcess", "processInstanceId" : "process4", "state": "questionState", "entitiesAllowedToRespond": ["ENTITY1","ENTITY2"], "severity" : "ACTION", ...

| By default, OperatorFabric considers that if the parent card (question card) is modified, then the child cards are deleted. If you want to keep the child cards when the parent card is changed, then you must add in the parent card the field "keepChildCards" and set it to true. |

The header in the card details will list the entities from which a response is expected, color-coding them depending on whether they’ve already responded (green) or not (orange).

| You can also set the property entitiesRequiredToRespond to differentiate between entities that can respond (entitiesAllowedToRespond) and those that must respond (entitiesRequiredToRespond). |

... "process" :"defaultProcess", "processInstanceId" : "process4", "state": "questionState", "entitiesAllowedToRespond": ["ENTITY1","ENTITY2","ENTITY3"], "entitiesRequiredToRespond": ["ENTITY1","ENTITY2"], "severity" : "ACTION", ...

| If entitiesRequiredToRespond is set and not empty, the card detail header will use this list instead of entitiesAllowedToRespond. If set, entitiesRequiredToRespond does not have to be a subset of entitiesAllowedToRespond. To determine if a user has the right to respond, OperatorFabric considers the union of the two lists. |

| If several entities are allowed/required to respond and the user is part of several of these entities, then the user's ability to respond to this card is deactivated. |
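
The entity rules of this section can be summarized by the sketch below. The function names are hypothetical and the code only covers the entity checks (not the perimeter check described in the permissions section); it is an illustration, not OperatorFabric's implementation.

```javascript
// Entities listed in the card detail header: the required ones if set and not empty,
// otherwise the allowed ones.
function entitiesShownInHeader(card) {
  const required = card.entitiesRequiredToRespond || [];
  return required.length > 0 ? required : (card.entitiesAllowedToRespond || []);
}

// A user can respond if exactly one of their entities is in the union of the two lists.
// Belonging to several of these entities deactivates the response.
function canUserRespond(userEntities, card) {
  const candidates = new Set([
    ...(card.entitiesAllowedToRespond || []),
    ...(card.entitiesRequiredToRespond || [])
  ]);
  const matching = userEntities.filter((entity) => candidates.has(entity));
  return matching.length === 1;
}
```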

12.3. Integrate child cards